How different are the expectations of experts and the general public for AI-based triage?

ARTICULATION of DISCOURSE | Oct. 31, 2022

- NAKAGAWA Hiroshi (Legal issues of AI ethics, AI agent, privacy, and digital legacy)

Team Leader of the Artificial Intelligence in Society Research Group, RIKEN Center for Advanced Intelligence Project (AIP)

While the novel coronavirus infection (COVID-19) was raging, medical facilities were under strain, and hospitals could not accept all of the many infected patients who needed hospitalization. This forced those on the front line of health care to sort out which patients should be admitted, a process known as "triage." Triage related to COVID-19 may well have been performed by front-line health care staff, such as those in public health centers, based on their past experience. It is conceivable, however, that in a future pandemic, AI could be used to perform triage and prioritize the patients who should be hospitalized. Even in the absence of a pandemic, specialized medical facilities needed by patients with serious illnesses will have to sort patients. Triage will also be necessary to prioritize recipients of transplant organs, which are chronically in short supply.

While the question of whether to use AI-based triage should be debated as an ethical issue, such a debate would benefit from knowing what people think about AI-based triage today. For this reason, we conducted a social survey [1] in which we asked 544 medical professionals, including doctors and nurses, and 1,079 members of the general public about their attitudes toward the use of AI-based triage.

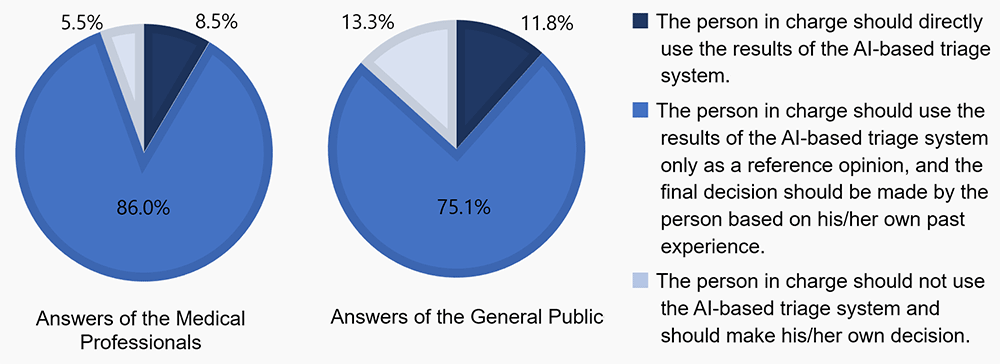

First, here are the results of the survey on the use of an AI-based triage system. Both the medical professionals and the members of the general public were asked to choose one of three options.

Unexpectedly, the general public was found to be more positive than the medical professionals about "directly using the results of an AI-based triage system." This result may indicate that the general public has strong expectations for AI. At the same time, nearly 2.5 times as many citizens as medical professionals answered that the AI-based triage system should not be used. This indicates that many citizens still do not completely trust AI and regard it as enigmatic. In other words, they think AI is a black box. The medical professionals, on the other hand, are willing to use the results of an AI-based triage system as a reference but would prefer to make the final decision themselves. This probably reflects their pride as medical professionals.

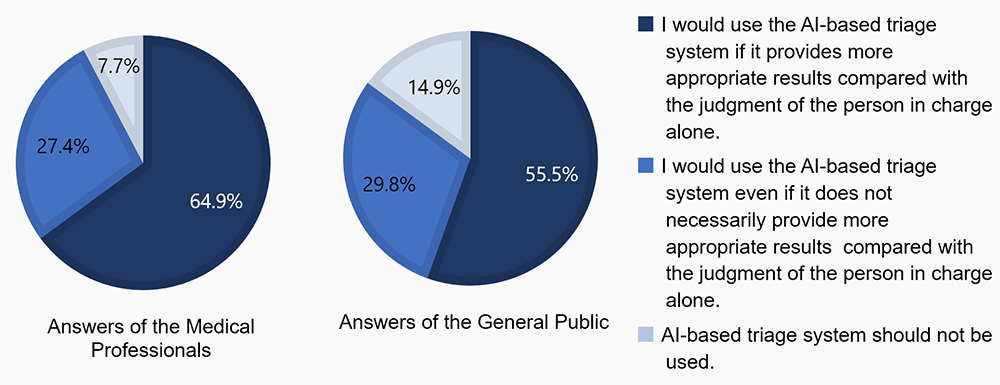

Next, the respondents were asked whether they would use the AI-based triage system, taking into account both the judgment of medical personnel and the performance of the AI-based triage system, and to select one of the following three options.

It is natural that a higher percentage of both the medical professionals and the general public chose the option "I would use the AI-based triage system if it provides more appropriate results." The share of medical professionals holding this view was nearly 10% higher, probably because experts tend to require evidence of an AI-based triage system's performance. That seems a natural characteristic of medical professionals. Regarding the second option, "I would use the AI-based triage system even if it does not necessarily provide more appropriate results," it was unexpected that a slightly higher percentage of the general public than of the medical professionals chose it. It may be that, although the general public has less understanding of the content of AI, they have prior expectations for it. On the flip side, twice as many citizens as medical professionals chose the third option, "AI-based triage should not be used." This is probably because the general public's lack of understanding of AI is tied to a psychology of not trusting, or being unable to trust, AI. These responses are consistent with the survey results described above.

To summarize the survey results: because the medical professionals can be assumed to have a deeper understanding of AI, they show less excessive expectation and less outright rejection of AI, and their willingness to use AI rests on evidence of its performance, which is a typical attitude of experts. In contrast, the analysis of the survey suggests that the general public has less understanding of the content of AI than the medical professionals, which leads many members of the general public to either excessive expectations or emotional rejection of AI.

One way to address this situation is to study a technology called "explainable AI," which explains why AI obtained the results it did. Explainability, however, has not necessarily raised the level of citizens' understanding of AI. The "Social Principles of Human-Centric AI" [2] initiated by the Cabinet Office also proposes addressing this issue not through AI technology alone, but also by improving citizens' AI literacy so that all citizens can use AI.

AI will be increasingly used in many aspects of social life in the future, but excessive expectations and emotional rejection of AI, as seen in this example, should be avoided. The results of this survey suggest that while medical professionals and the general public differ in their views of AI, these differences seem relatively small. It should, therefore, be fully possible for the general public to acquire basic AI literacy. In light of this, it is necessary to provide opportunities to learn about AI, taking such differences in literacy into account. For example, people, whether experts or not, should have a sincere dialogue on "trust" in AI. I believe we are now in an era in which experts should conduct such outreach activities as one of their social roles, thereby ensuring that all people can have a certain level of knowledge about AI's capabilities.

[1] JST-RISTEX "Human-Information Technology Ecosystem" R&D Focus Area "PATH-AI: Mapping an Intercultural Path to Privacy, Agency, and Trust in Human-AI Ecosystem" (Principal Investigator: NAKAGAWA Hiroshi, Grant Number: JPMJRX19H") FY2022 survey results

[2] Cabinet Office "Social Principles of Human-Centric AI" (Council for Integrated Innovation Strategy, March 29, 2019)