Progress Report

Development of “Jizai Hon-yaku-ki (At-will Translator)” connecting various minds based on brain and body functions

[3] Functions of Jizai Hon-yaku-ki

Progress until FY2024

1. Outline of the project

In R&D Item 3, we aim to develop Jizai Hon-yaku-ki itself, specifically the key functions it needs to support everyday interactions.

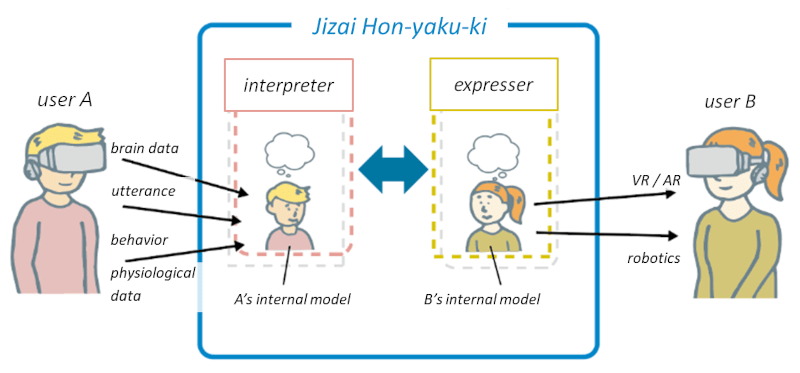

Jizai Hon-yaku-ki consists of two components: an interpreter, which “reads” the user’s mental state, and an expresser, which “conveys” it to another user.

The primary task of this R&D Item is to develop these two components so that they are sensitive to diverse contexts and personalities, allowing Jizai Hon-yaku-ki to assist our everyday communication.

2. Outcome so far

- Developed a VR system that allows conversations with others in a comfortable environment

- Developed a system to share visual perspectives with others

- Developed a framework for visualizing the user's mental state from biosignals

- Discovered speech indicators that can provide clues to the speaker's emotions

- Developed a method to quantify conversation features using large-scale language models

Outcome 1: We developed a VR conversation system that provides a comfortable conversation environment by allowing the appearance and the richness of expressions to be changed freely, and demonstrated it to people with autism spectrum disorder (ASD). We will use the participants' feedback on their experience to develop a prototype of Jizai Hon-yaku-ki.

Image provided by Masahiko Inami (U Tokyo)

Outcome 2: We developed a system called "Lived Montage" that uses a head-mounted display to let users see things from another person's point of view, and held a workshop on it. We found that sharing a view is useful as a communication support function, and that seeing the same thing as another person, or sharing their view in sync with their heartbeat, has a significant effect on the user's mental state.

Image provided by Hiroto Saito (U Tokyo)

Outcome 3: We developed a framework that visualizes the user's mental state on a two-dimensional plane based on biosignals (facial expressions, speech, heart rate, and electroencephalography (EEG) signals). This outcome will contribute to the development of a prototype of Jizai Hon-yaku-ki.

Outcome 4: Analysis of speech data has shown that temporal changes in voice stress can serve as a cue for inferring a speaker's emotions. This finding will be useful for developing devices that help users better understand what others say.

Outcome 5: We developed a method to quantify conversation features using a large language model (LLM), and built an AI model that uses the extracted features to distinguish individuals with ASD from neurotypical individuals. This will be useful for developing an interpreter that reflects the diversity of individuals and contexts.

3. Future plans

We will continue developing the interpreter and the expresser so that they are sensitive to contexts and personalities.

In parallel, we will attempt to develop a proof-of-concept product of Jizai Hon-yaku-ki by incorporating the findings from the other five R&D Items.

(U Tokyo: Y. Nagai, M. Inami, H. Saito,