Progress Report

Smart Robot that is Close to One Person for a Lifetime

[2] AI systems for smart robots

Progress until FY2024

1. Outline of the project

Moravec's paradox is the observation that tasks humans find easy are difficult for artificial intelligence and robots. The recent development of large language models (LLMs) has made this gap more pronounced. Leading AI companies, including Google DeepMind, OpenAI, and Tesla, have turned to "(humanoid) robots" as the next target for LLMs, and the field expanded significantly in 2023.

This study aims to realize robot intelligence that supports human manual labor, especially housework, through a distinctive approach called "deep predictive learning", which is grounded in findings from neuroscience. Deep predictive learning is a framework that uses deep learning to predict high-dimensional changes in sensation and motion in real time while minimizing prediction error. It has already been applied to handling clothes and food and to moving around a house.
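As a toy illustration of the prediction-error-minimization loop described above, here is a minimal Python sketch. It rests on assumptions that are not from the project: a two-dimensional state stands in for high-dimensional sensorimotor data, a linear predictor stands in for the deep network, and the demonstration is a synthetic circular trajectory. The names `make_demo`, `LinearPredictor`, `train`, and `run_online` are hypothetical. The model is trained to predict the next state from the current one, and at run time each prediction is used as the next command.

```python
import math
import random


def make_demo(steps=200, omega=0.1):
    """Hypothetical demonstration: a 2-D sensorimotor state (think one joint
    angle and one sensor reading) that traces a circle at a fixed angular step."""
    return [[math.cos(omega * t), math.sin(omega * t)] for t in range(steps)]


class LinearPredictor:
    """Predicts the next state as W @ x + b.

    A deliberately tiny, hypothetical stand-in for a deep network; the point is
    the training objective (next-step prediction error), not the model class.
    """

    def __init__(self, dim, seed=0):
        rng = random.Random(seed)
        self.W = [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(dim)]
        self.b = [0.0] * dim

    def predict(self, x):
        return [sum(w * xj for w, xj in zip(row, x)) + bi
                for row, bi in zip(self.W, self.b)]

    def train_step(self, x, target, lr):
        """One SGD step on 0.5 * ||prediction - target||^2.
        Returns the squared error measured before the update."""
        err = [p - t for p, t in zip(self.predict(x), target)]
        for i, e in enumerate(err):
            for j, xj in enumerate(x):
                self.W[i][j] -= lr * e * xj
            self.b[i] -= lr * e
        return sum(e * e for e in err)


def train(model, demo, epochs=50, lr=0.1):
    """Minimize next-step prediction error over consecutive pairs of the demo.
    Returns the mean squared error of the last epoch."""
    pairs = list(zip(demo[:-1], demo[1:]))
    mse = float("inf")
    for _ in range(epochs):
        mse = sum(model.train_step(x, nxt, lr) for x, nxt in pairs) / len(pairs)
    return mse


def run_online(model, observe, steps):
    """Real-time loop: at every step read the current state, predict the next
    one, and use the prediction as the command (here it is only recorded)."""
    commands = []
    for t in range(steps):
        x = observe(t)  # on a robot this would come from live sensors
        commands.append(model.predict(x))
    return commands


demo = make_demo()
model = LinearPredictor(dim=2)
mse = train(model, demo)
print(f"final training MSE: {mse:.1e}")
commands = run_online(model, observe=lambda t: demo[t], steps=10)
```

On a robot, `observe` would read fresh sensor data at each control cycle, so the next prediction is always conditioned on the actual current state rather than on the previous prediction.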

2. Outcomes so far

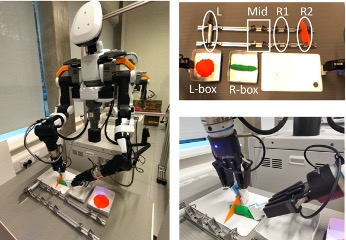

We conducted several motion learning studies using Dry-AIREC, the human-collaborative robot developed in our project, as well as other robots. Here are some examples:

1) Learning multiple actions in cooking:

To enable a robot to perform multiple tasks in sequence, we proposed a learning system that uses multiple deep learning models with a "latent space" representing object features. We evaluated the system on a series of cooking tasks, pouring pasta or soup and then stirring, and confirmed that the robot could perform the pouring and stirring actions consecutively.

2) Research on speeding up operations:

We proposed a method in which high-quality data are collected by taking ample time during teaching, and the model then runs inference several times faster than real time when the robot actually operates, achieving high-speed motion. Using this method with inference three times faster than in the teaching stage, we confirmed that a real robot could quickly stack randomly placed cups with an average success rate of 94%.
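The report does not spell out how the speed-up is implemented. The sketch below only illustrates the timing arithmetic under the simplest assumption: the model produces one prediction per control step, and at run time those predictions are issued with a control period `speedup` times shorter than the period used during teaching. `TimedCommand`, `schedule`, and the 10 Hz teaching rate are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TimedCommand:
    time_s: float  # send time, relative to the start of the motion
    state: list    # predicted state (e.g. joint angles) to send


def schedule(predicted_states, teach_period_s, speedup):
    """Assign send times to a sequence of per-step predictions.

    Hypothetical assumption: the model was trained on data recorded with
    control period teach_period_s, and execution issues one prediction every
    teach_period_s / speedup seconds.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    period = teach_period_s / speedup
    return [TimedCommand(i * period, s) for i, s in enumerate(predicted_states)]


# Hypothetical numbers: a motion taught as 31 steps at 10 Hz (3.0 s long).
states = [[0.01 * i] for i in range(31)]
taught = schedule(states, teach_period_s=0.1, speedup=1)
fast = schedule(states, teach_period_s=0.1, speedup=3)
print(f"taught duration: {taught[-1].time_s:.2f} s, executed: {fast[-1].time_s:.2f} s")
```

This shows only the timing; it says nothing about how the model stays accurate when the real motion runs faster than the data it was trained on.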

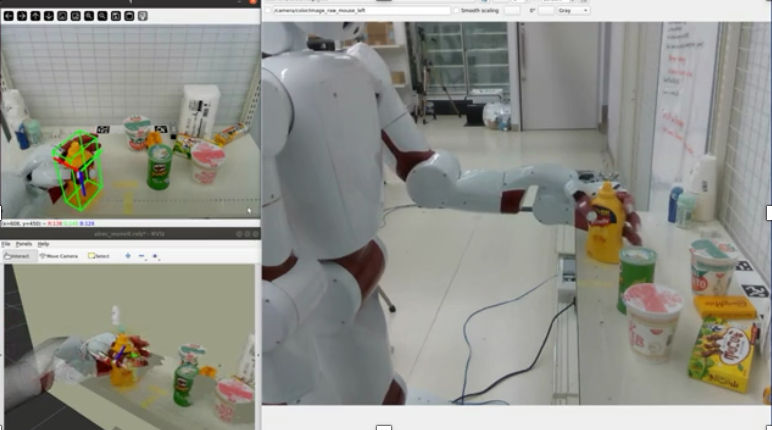

3) Object grasping in diverse environments:

AIREC's technology for picking multiple products, based on vision-based estimation of force distribution, was demonstrated in a simulated convenience store environment at the National Institute of Advanced Industrial Science and Technology. The robot continued the demonstration autonomously even with multiple people present, and the picking success rate was confirmed to exceed 85%.

4) Building a smart-robot foundation model for transfer learning:

With the aim of building a robot foundation model that makes task acquisition by AI robots more efficient, we produced AIREC-Basic, a simplified dual-arm mobile manipulator, and are developing and verifying a foundation model for acquiring difficult tasks, such as taking laundry out of a drum-type washing machine.

3. Future plans

Starting in 2024, the University of Edinburgh in the UK, one of the world's leading AI institutions, will join the project and conduct research on hierarchical motion planning for long-term tasks. We will implement this technology in Dry-AIREC and proceed with technical verification, while also disseminating the results internationally. Hitachi, Ltd. will also join the project from fiscal year 2024 and conduct research on building a foundation model for smart robots using AIREC-Basic. Going forward, we plan to have robots learn and demonstrate a variety of tasks with the aim of putting the technology into practical use in society.