Cognitive development and sensorimotor learning based on embodiment.

Cognitive development based on embodiment is one of the main issues of our project.

To address this issue, from body movements up to human interaction, we have studied sensorimotor information analysis techniques, a coupled chaos model of motor emergence, an affordance acquisition model, a spiking neural network model of the mirror neuron system, an agency model, and the observation of human behavior. These studies connect seamlessly to our studies of verbal imitation and the development of empathy. They are integrated into design principles for cognitive developmental robots and into an understanding of human development, from the perspective of a “cognitive model mediating from embodied behaviors to human communication.”

Sensorimotor information analysis

Although many researchers have thought that embodiment plays an important role in sensorimotor learning, few analyses have looked for internal structure in sensorimotor data, because no appropriate technique was available. To analyze the information structure of the sensorimotor flow of simulated agents, we proposed using mutual information; transfer entropy, an information index of causal relationships; the wavelet bifurcation diagram method, which analyzes associative structure in high-dimensional systems [Pitti2008][Pitti2006]; and hierarchical transfer entropy [Lungarella2006].
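To make the first two measures concrete, here is a minimal sketch of plug-in (histogram) estimators for mutual information and transfer entropy on already-discretized sequences, with a history length of one step. The function names, the binary toy signal, and the history length are assumptions for illustration; the cited studies may use different estimators, binning, and embedding lengths.

```python
import math
import random
from collections import Counter


def _entropy(counts, n):
    """Shannon entropy in bits from a Counter of symbol counts over n samples."""
    return -sum(c / n * math.log2(c / n) for c in counts.values())


def mutual_information(x, y):
    """Plug-in estimate of I(X;Y) = H(X) + H(Y) - H(X,Y) in bits."""
    n = len(x)
    return (_entropy(Counter(x), n) + _entropy(Counter(y), n)
            - _entropy(Counter(zip(x, y)), n))


def transfer_entropy(source, target):
    """Plug-in estimate of TE(source -> target) in bits, history length 1:
    TE = sum p(y1, y0, x0) * log2[p(y1 | y0, x0) / p(y1 | y0)],
    with y1 = target[t+1], y0 = target[t], x0 = source[t]."""
    triples = list(zip(target[1:], target[:-1], source[:-1]))
    n = len(triples)
    c_full = Counter(triples)
    c_y0x0 = Counter((y0, x0) for _, y0, x0 in triples)
    c_y1y0 = Counter((y1, y0) for y1, y0, _ in triples)
    c_y0 = Counter(y0 for _, y0, _ in triples)
    te = 0.0
    for (y1, y0, x0), c in c_full.items():
        p_given_both = c / c_y0x0[(y0, x0)]        # p(y1 | y0, x0)
        p_given_own = c_y1y0[(y1, y0)] / c_y0[y0]  # p(y1 | y0)
        te += c / n * math.log2(p_given_both / p_given_own)
    return te


# Toy check: y copies x with a one-step delay, so information flows x -> y only.
random.seed(0)
x = [random.randint(0, 1) for _ in range(10_000)]
y = [0] + x[:-1]
te_xy = transfer_entropy(x, y)
te_yx = transfer_entropy(y, x)
mi_xx = mutual_information(x, x)
print(f"TE(x->y) = {te_xy:.3f} bits, TE(y->x) = {te_yx:.3f} bits")
```

Transfer entropy is asymmetric, which is what allows statements about the direction of information flow, such as the body-to-controller comparison in the walking analysis; mutual information is symmetric and cannot distinguish direction.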

We analyzed a coupled chaos walking simulation to find the information flow within a coupled system of chaotic oscillators and a compass robot. The analysis showed that the information flow from the body to the chaotic controller is larger than the flow in the opposite direction. This result indicates that the system generates walking movements by exploiting body dynamics [Pitti2007].

Fig.1 Coupled system with compass robot and chaos oscillators.

Fig.2 The information flow between a compass robot and chaos oscillators.

We proposed a developmental hypothesis for the acquisition of behavior units, so-called motor primitives. The hypothesis was validated computationally with a musculoskeletal baby model, which acquired several motor primitives through interaction with its surrounding environment and exploited them [Mori2007].

Motor acquisition by body-chaos coupled system.

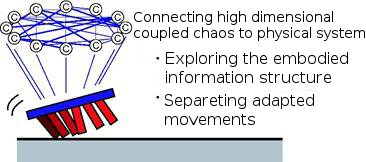

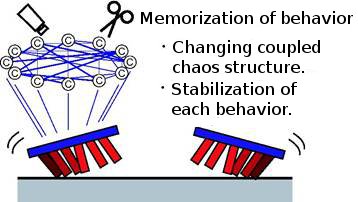

We proposed a behavior acquisition model (Fig.3, Fig.4, Fig.5). The model is based on coupled chaotic elements whose internal connections are learned from movement correlations. The model shows adaptability and goal-directedness. As a model of the spinobulbar system of fetuses and infants, the chaos model provides a basic principle of motor development.
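As a rough illustration of coupled chaos with internal connections learned from movement correlations, the sketch below couples a few chaotic logistic maps through a weight matrix and strengthens the weights between elements whose movements (velocity fluctuations of a toy first-order "body") are correlated. Everything here is a hypothetical simplification: the logistic map, the parameter values, the low-pass "body", and the Hebbian update rule are assumptions, not the published model, which couples chaos with a musculoskeletal body.

```python
import numpy as np


def logistic(x, a=3.9):
    """Chaotic logistic map; with a = 3.9 it maps [0, 1] into [0, 0.975]."""
    return a * x * (1.0 - x)


def simulate(n=4, steps=3000, eps=0.3, tau=0.1, eta=0.005, seed=0):
    """Hypothetical body-chaos coupling: n chaotic elements drive n toy joints."""
    rng = np.random.default_rng(seed)
    x = rng.random(n)                 # states of the chaotic elements
    q = np.zeros(n)                   # joint angles of a first-order toy "body"
    v_mean = np.zeros(n)              # running mean of joint velocities
    w = np.ones((n, n))               # internal coupling weights (no self-coupling)
    np.fill_diagonal(w, 0.0)
    for _ in range(steps):
        fx = logistic(x)
        # Each element mixes its own chaotic update with a weighted average of the others.
        drive = (w @ fx) / w.sum(axis=1)
        x = (1.0 - eps) * fx + eps * drive
        # The body low-pass filters the chaotic output into joint movements.
        dq = tau * (x - q)
        q = q + dq
        # Hebbian update from the correlation of joint-velocity fluctuations.
        v_mean = 0.99 * v_mean + 0.01 * dq
        v = dq - v_mean
        w += eta * np.outer(v, v)
        np.clip(w, 1e-3, 1.0, out=w)  # keep weights positive and bounded
        np.fill_diagonal(w, 0.0)
    return x, q, w


x, q, w = simulate()
print(np.round(w, 3))
```

Clipping the weights to a small positive floor keeps every row sum nonzero, so the weighted average stays defined and the chaotic states remain in [0, 1].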

Fig.3 Body-chaos coupling model.

Fig.4 Model for motor acquisition with a chaos system.

Fig.5 Movements acquired by the model.

Mother-infant tactile interaction through holding

We measured tactile information during holding interactions between mothers and infants using the tactile sensor suits developed in our project. This measurement is the first attempt to measure the holding relationship scientifically. In the experiment, using the tactile sensor suits, mothers held a pillow, a baby doll, and an infant. We analyzed the data with the statistical techniques of Discriminant Analysis and Principal Component Analysis, and found a clear difference between mothers and students.
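The two analyses can be sketched in a few lines of NumPy. The data here are synthetic stand-ins (random "pressure" vectors from a hypothetical 16-sensor suit), not the measured holding data, and the two-class Fisher discriminant is one common form of discriminant analysis; the variant used in the study is not specified.

```python
import numpy as np


def pca(X, k=2):
    """Project centered data onto its first k principal components via SVD."""
    Xc = X - X.mean(axis=0)
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s**2 / np.sum(s**2)        # fraction of variance per component
    return Xc @ vt[:k].T, explained[:k]


def fisher_lda(X, y):
    """Two-class Fisher discriminant: w is proportional to Sw^-1 (m1 - m0)."""
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter matrix, with a tiny ridge for numerical stability.
    sw = (X0 - m0).T @ (X0 - m0) + (X1 - m1).T @ (X1 - m1)
    w = np.linalg.solve(sw + 1e-6 * np.eye(X.shape[1]), m1 - m0)
    threshold = w @ (m0 + m1) / 2.0        # midpoint between projected class means
    return w, threshold


# Synthetic stand-in for tactile-suit recordings: 50 holds per group, 16 sensors.
rng = np.random.default_rng(0)
n_sensors = 16
mothers = rng.normal(0.0, 1.0, (50, n_sensors)) + np.linspace(0.5, 1.5, n_sensors)
students = rng.normal(0.0, 1.0, (50, n_sensors))
X = np.vstack([mothers, students])
y = np.array([1] * 50 + [0] * 50)          # 1 = mother, 0 = student

scores, explained = pca(X)
w, threshold = fisher_lda(X, y)
accuracy = np.mean((X @ w > threshold).astype(int) == y)
print(f"PC variance ratios: {explained.round(3)}, LDA training accuracy: {accuracy:.2f}")
```

PCA is unsupervised and shows the main axes of variation in the pressure patterns, while the discriminant uses the group labels to find the single direction that best separates them. The accuracy printed here is measured on the training data and is therefore optimistic.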

Fig.6 The tactile sensor suits for babies.

Fig.7 The tactile sensor suits for mothers.

Fig.8 The mother-infant interaction experiment and the data.

Fig.9 The result of Discriminant Analysis of holding data from mothers and students.

Fig.10 The result of Principal Component Analysis for the holding experiment.

Acquisition of a spatial concept in VIP neurons

The concept of space is the most basic environmental information for recognizing one's own body in terms of the relationship between the body and the environment. We proposed a self-organizing spatial representation model that recognizes consistent positions in head-centered coordinates from retinal position and eye-gaze information; the self-organization is aided by hand regard.

Based on a neuron model of head-centered coordinates, we constructed a model that integrates information in a self-organizing manner when the hand touches the head, and that resembles actual VIP neurons in the brain [Fuke2008].
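The coordinate transformation at the core of this model can be illustrated with a deliberately simplified, supervised stand-in: during hand regard, proprioception reports where the hand is relative to the head, and a delta rule (LMS) learns to combine retinal position and gaze direction into that head-centered position. The one-dimensional angles, normalized units, and linear read-out are assumptions; the published model is self-organizing rather than supervised.

```python
import numpy as np

rng = np.random.default_rng(0)
w = np.zeros(3)          # weights on [retinal position, gaze angle, bias]
lr = 0.05                # learning rate (normalized units)

for _ in range(5000):
    # One moment of hand regard: the hand is seen at some retinal position while
    # the eyes point in some direction, and proprioception reports the hand's
    # head-centered position.
    retinal = rng.uniform(-1.0, 1.0)
    gaze = rng.uniform(-1.0, 1.0)
    hand_head_centered = retinal + gaze       # 1D geometry: head-centered = retina + gaze
    u = np.array([retinal, gaze, 1.0])
    error = hand_head_centered - w @ u
    w += lr * error * u                        # delta (LMS) rule


def head_centered(retinal, gaze):
    """Estimate a target's head-centered position with the learned weights."""
    return w @ np.array([retinal, gaze, 1.0])


print(np.round(w, 3), head_centered(0.2, -0.5))
```

Once learned, the same weights give a gaze-invariant position for any seen object, not only the hand, which is the property that makes a head-centered representation useful.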

Fig.11 A spatial concept acquisition model.

Fig.12 Acquisition of a spatial concept.

Acquisition of facial representation

Infants seem to have a body representation of their own face very early in life, which enables them to imitate others. Two issues are of particular interest in body representation research:

(a) Fetuses and infants cannot directly acquire visual information about their own face, whereas a body representation should be a multimodal representation integrating tactile, visual, and somatic sensation.

(b) How to recognize facial parts, including the mouth, eyes, and nose.

Issue (a) is the problem of how to relate body parts that cannot be seen, such as one's own face, to visual information about body parts that can be seen. We proposed a neural network model that learns to estimate hand position from joint angles by learning the relationship between joint changes and changes in visual position while the hand moves within the visual field. For (b), the robot must detect salient parts such as the eyes and nose from sensory information, even though the sensor positions are not calibrated. We therefore proposed a model that extracts salient tactile information based on discontinuities in multiple kinds of sensory information on the surface of the face. Moreover, the model links the extracted information about its own face to salient visual information from another person's face, so that the model induces imitation of the other's face [Fuke2007].
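Finding facial landmarks from discontinuities across several uncalibrated sensory channels can be sketched as follows: sum the local gradient magnitude of each channel over a sensor grid and keep the strongest points. The grid layout, the two synthetic channels (a raised "nose" patch), and the use of `np.gradient` as the discontinuity measure are all assumptions for illustration.

```python
import numpy as np


def discontinuity_map(channels):
    """Sum over sensory channels of the local gradient magnitude on a sensor grid."""
    total = np.zeros(channels[0].shape)
    for channel in channels:
        gy, gx = np.gradient(channel.astype(float))   # central differences inside the grid
        total += np.hypot(gx, gy)
    return total


def salient_points(channels, k=4):
    """Return the (row, col) indices of the k most discontinuous sensor positions."""
    d = discontinuity_map(channels)
    top = np.argsort(d, axis=None)[::-1][:k]         # flat indices, strongest first
    return np.column_stack(np.unravel_index(top, d.shape)), d


# Synthetic 20 x 20 face patch: a raised "nose" region changes both channels.
pressure = np.zeros((20, 20))
pressure[8:12, 8:12] = 1.0
deformation = 0.5 * pressure        # a second, differently scaled modality
points, dmap = salient_points([pressure, deformation])
print(points)
```

Because only changes between neighboring sensors matter, the measure does not need the absolute sensor positions to be calibrated, and summing over channels favors places where several modalities change at once.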

Fig.13 Acquisition of facial representation.

Tool use

We built a tool-use model from the perspective of discovering one's own body and constructing a body representation. Neuroscientists have found that some neurons in the monkey's intraparietal sulcus are activated when the monkey visually attends to one of its own body parts. To explain this phenomenon, we proposed a model that acquires a cross-modal body representation integrating visual and somatic information, triggered by tactile sensation. We implemented the model on CB2, and CB2 reproduced behavior similar to that of the monkey in the experiment [Ogino2009][Hikita2008].
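One way to picture cross-modal integration triggered by touch is a Hebbian association between proprioceptive posture units and visual location units that is updated only at moments of tactile contact, when visual attention is most likely to land on the touching hand. The posture-to-location mapping, attention noise, touch probability, and learning rate below are hypothetical, and the sketch omits the tool-extension part of the experiment.

```python
import numpy as np

rng = np.random.default_rng(0)
n_postures, n_locations = 10, 20
# Hypothetical ground truth: where the hand appears in the visual field for each posture.
hand_location = 2 * np.arange(n_postures) + 1
W = np.zeros((n_locations, n_postures))     # visual-location x posture association

for _ in range(5000):
    posture = rng.integers(n_postures)
    touched = rng.random() < 0.3            # tactile contact occurs on ~30% of steps
    if not touched:
        continue                            # learning is gated by touch
    # Touch draws attention to the hand most of the time, but attention is noisy.
    if rng.random() < 0.8:
        attended = hand_location[posture]
    else:
        attended = rng.integers(n_locations)
    W[attended, posture] += 0.1             # Hebbian co-activation update


# After learning, a posture alone predicts where the hand should be seen.
predicted = W.argmax(axis=0)
print(predicted)
```

The gating by touch is the design choice of interest: it restricts learning to moments when the visual scene is most likely to contain the agent's own body.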

Fig.14 Body recognition and a tool use experiment.

Fig.15 Body recognition model.

We also proposed a model that generates tool-handling behaviors in an appropriate order. We focused on the recurrent structure of the process in tool-use situations and described the order of movements by the recurrent structure of combinations of tools and objects. The model is based on a Restricted Boltzmann Machine as an associative neural network and learns the recurrent structures of tool use in a hierarchical network. The results of a simulation experiment indicate that, after learning, the model uses the recurrent structures to choose the appropriate meta-state for the situation and generates joint angles for appropriate reaching.
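For readers unfamiliar with the building block, here is a minimal single-layer Restricted Boltzmann Machine trained with one-step contrastive divergence (CD-1) on hypothetical tool/object patterns. It shows only the associative core; the hierarchical network, the recurrent structure of tool use, and the mapping to joint angles in the published model are not reproduced, and the pattern encoding is an assumption.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class RBM:
    """Bernoulli-Bernoulli RBM trained with one-step contrastive divergence."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = np.random.default_rng(seed)
        self.W = 0.01 * self.rng.standard_normal((n_visible, n_hidden))
        self.b = np.zeros(n_visible)    # visible biases
        self.c = np.zeros(n_hidden)     # hidden biases

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.c)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b)

    def cd1_step(self, v0, lr=0.1):
        ph0 = self.hidden_probs(v0)
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)   # sample hidden states
        pv1 = self.visible_probs(h0)                              # reconstruction
        ph1 = self.hidden_probs(pv1)
        n = v0.shape[0]
        # Positive (data) statistics minus negative (reconstruction) statistics.
        self.W += lr * (v0.T @ ph0 - pv1.T @ ph1) / n
        self.b += lr * (v0 - pv1).mean(axis=0)
        self.c += lr * (ph0 - ph1).mean(axis=0)

    def reconstruction_error(self, v):
        return float(np.mean((v - self.visible_probs(self.hidden_probs(v))) ** 2))


# Hypothetical patterns: one-hot tool (3 bits) followed by one-hot object (3 bits).
data = np.array([
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0, 1],
    [0, 0, 1, 1, 0, 0],
], dtype=float)

rbm = RBM(n_visible=6, n_hidden=8)
err_before = rbm.reconstruction_error(data)
for _ in range(2000):
    rbm.cd1_step(data)
err_after = rbm.reconstruction_error(data)
print(f"reconstruction error: {err_before:.3f} -> {err_after:.3f}")
```

Because the RBM learns a joint distribution over visible units, clamping part of a pattern (for example, the tool bits) and sampling the rest is what lets such a network act as an associative memory.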

Fig.16 Appropriate states are chosen, and the corresponding motor commands are supplied to the lower level of the hierarchy.

Mirror Neuron System

The Mirror Neuron System (MNS) seems to underlie empathy. We proposed a model that constructs an MNS in a self-organizing manner.

The model consists of a spiking neural network whose connection weights are modified by Spike Timing Dependent Plasticity (STDP). We conducted a grasping experiment similar to the one in which the MNS was discovered. In the experiment, the network received visual information from a camera and tactile information from tactile sensors on the surface of an object. The results indicated that the network behaves like an MNS: it reconstructs visual signals from tactile information alone, without visual input [Pitti2009].
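STDP itself can be written down directly. The sketch below uses the common pair-based rule with exponential timing windows, summing over all pre/post spike pairs; the amplitudes, time constants, and spike times are assumptions, and the network, neuron model, and grasping setup of the study are not reproduced.

```python
import numpy as np


def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for delta_t = t_post - t_pre (ms).
    Pre-before-post (delta_t > 0) potentiates; post-before-pre depresses."""
    dt = np.asarray(delta_t, dtype=float)
    decay_plus = np.exp(-np.abs(dt) / tau_plus)
    decay_minus = np.exp(-np.abs(dt) / tau_minus)
    return np.where(dt > 0, a_plus * decay_plus,
                    np.where(dt < 0, -a_minus * decay_minus, 0.0))


def update_weight(w, pre_spikes, post_spikes, w_max=1.0):
    """All-pairs STDP update of one synapse, clipped to [0, w_max]."""
    dts = np.subtract.outer(np.asarray(post_spikes, float), np.asarray(pre_spikes, float))
    return float(np.clip(w + stdp_dw(dts).sum(), 0.0, w_max))


# Hypothetical spike times (ms): a "visual" neuron (pre) and a touch-driven neuron (post).
pre = [10.0, 50.0, 90.0]
w_causal = update_weight(0.5, pre, [15.0, 55.0, 95.0])  # post fires 5 ms after pre
w_anti = update_weight(0.5, pre, [5.0, 45.0, 85.0])     # post fires 5 ms before pre
print(w_causal, w_anti)
```

Under this rule, if visual units reliably fire just before touch-driven units during grasping, the connections between them strengthen; an association of this kind could later let tactile input alone evoke visual activity.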

Fig.17 A view of the grasping experiments with the visual-tactile integration network.

Agency model

Humans and chimpanzees can recognize their reflection in a mirror as their own body. A feeling of agency requires, at a minimum, the integration of efferent motor commands and afferent sensory information. We proposed an agency index for a spiking neural network and applied it to a robot head. The network receives visual input and an efference copy of its motor commands, and generates motor commands. After a sufficient period of learning, the network predicts not only visual input from motor information but also motor information from visual information. Moreover, when the robot saw itself in a mirror, the agency index increased [Pitti2008]. This situation is similar to the mirror test used with infants and animals.
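The agency index is not defined in this summary, so the following is only a hypothetical illustration of the underlying idea: after learning forward (motor to vision) and inverse (vision to motor) predictions from the robot's own movements, bidirectional prediction quality is high when what the robot sees is contingent on its motor commands (its own body or its mirror image) and low for an independently moving agent. The linear gains, the R-squared-based index, and the noise levels are assumptions standing in for the spiking network.

```python
import numpy as np

rng = np.random.default_rng(0)


def observe(motor, contingent, noise=0.1):
    """Hypothetical visual motion signal. If contingent (own body or mirror image),
    it follows the robot's motor command; otherwise it moves independently."""
    if contingent:
        return 0.8 * motor + noise * rng.standard_normal(motor.shape)
    return rng.standard_normal(motor.shape)


def r_squared(target, prediction):
    """1 - residual variance / target variance (negative for poor predictors)."""
    return 1.0 - np.mean((target - prediction) ** 2) / np.var(target)


# Learning phase: fit forward (motor -> vision) and inverse (vision -> motor) gains.
m_train = rng.standard_normal(1000)
v_train = observe(m_train, contingent=True)
g_forward = (m_train @ v_train) / (m_train @ m_train)
g_inverse = (v_train @ m_train) / (v_train @ v_train)


def agency_index(motor, vision):
    """Hypothetical index: mean prediction quality in both directions."""
    return 0.5 * (r_squared(vision, g_forward * motor) + r_squared(motor, g_inverse * vision))


m_test = rng.standard_normal(500)
idx_mirror = agency_index(m_test, observe(m_test, contingent=True))
idx_other = agency_index(m_test, observe(m_test, contingent=False))
print(f"mirror: {idx_mirror:.2f}, other agent: {idx_other:.2f}")
```

Requiring prediction in both directions reflects the bidirectional prediction described above; a one-way forward model alone could be fooled by visual motion that merely happens to correlate with the robot's commands.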