Verbal imitation and empathy development.

We have studied verbal imitation, language, and empathy development as an integrative issue within our group's theme.

Verbal imitation

The development of verbal imitation is an important element of cognitive development for the social environment of early communication. First, we proposed a synthetic model of infants' utterance development that realizes mutual imitation between a human and a robot [Miura2007][Miura2006][Miura2006][Miura2007]. The results indicated that the incompleteness of the caregiver's imitations induces natural infant utterances during mutual imitation.

In addition, for more natural mutual imitation between a caregiver and an infant, it is important that the fundamental frequency of speech can be varied, as in motherese. We designed a structure equivalent to the human vocal cords and built a new robot around it. The robot won the Good Design Award 2008 in Japan.

The model was improved to account for the guidance effect of imitation. Caregivers and infants are subject not only to their perceptual and motor limitations and the dynamics of the vocal organs (the perceptual and motor magnet bias) but also to the expectation of being imitated by the other party. We call the latter the auto-mirroring bias. We conducted experiments that validated the guidance effect [Ishihara2009][Ishihara2008]. We also proposed an imitation learning model that remains effective even when caregivers do not imitate infants frequently.
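As a rough illustration of how the two biases could combine, the sketch below treats a heard vowel as a point in a two-formant space. It is not the published model: the function names, the weights `magnet` and `mirror`, and the formant values are hypothetical, and the assumption that the auto-mirroring bias pulls perception toward the listener's own previous utterance is only one reading of the description above.

```python
def pull_toward(point, target, weight):
    """Move `point` a fraction `weight` (0..1) of the way toward `target`."""
    return tuple(p + weight * (t - p) for p, t in zip(point, target))


def nearest(point, prototypes):
    """Return the prototype with the smallest squared distance to `point`."""
    return min(prototypes,
               key=lambda q: sum((a - b) ** 2 for a, b in zip(point, q)))


def perceive(heard, prototypes, own_last, magnet=0.3, mirror=0.3):
    """Hypothetical biased perception of a heard vowel.

    1. Magnet bias: the percept is pulled toward the listener's nearest
       vowel prototype.
    2. Auto-mirroring bias (assumed form): the percept is pulled further
       toward the listener's own last utterance, because the listener
       expects to have been imitated.
    """
    biased = pull_toward(heard, nearest(heard, prototypes), magnet)
    return pull_toward(biased, own_last, mirror)
```

With both weights set to zero the percept equals the heard vowel, and larger weights draw it toward what the listener already knows or has just said, which is one way a partner's imperfect imitation could still guide learning.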

Fig.1 The mechanism of utterances.

Fig.2 Vocal robot emphasizing visual image for the articulation.

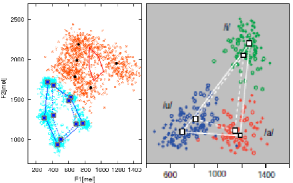

Finally, we modeled infants' vowel acquisition as a process of mutual imitation between a caregiver and an infant. The model includes the caregiver's perceptual and motor magnet bias and auto-mirroring bias, the change in the infant's vocal ability with age, and the extension of the caregiver's expectation of the infant's vocal spectrum. We simulated the model to validate its computational consistency (Fig.3). The results indicated that the model can be regarded as inducing motherese, because the caregiver's speech spectrum was extended compared with the result obtained without the new model.

Fig.3 Simulated process of vowel acquisition.

Fig.4 Comparison between the utterance features simulated by our model and the vowel expansion observed in motherese [Kuhl et al. 1997]

Acquisition of onomatopoeias

Toward human interaction grounded in body movement, we proposed an onomatopoeia acquisition model based on the integration of multiple modalities, as a form of primitive language acquisition.

The model extracts the self-movements that correspond to onomatopoeias from a redundant auditory signal, based on rhythmic synchronization.
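One simple way to picture selection by rhythmic synchronization is to compare onset trains. The sketch below is an assumption-laden illustration, not the model itself: sound and movements are reduced to hypothetical binary onset sequences (1 = an onset in that frame), and `sync_score` and `most_synchronized` are names invented here.

```python
import math


def sync_score(sound, motion, max_lag=2):
    """Normalized overlap of two binary onset trains, maximized over a
    small range of time shifts (in frames). Returns a value in 0..1."""
    norm = math.sqrt(sum(sound) * sum(motion))
    if norm == 0:
        return 0.0
    best = 0
    for lag in range(-max_lag, max_lag + 1):
        overlap = sum(sound[i] * motion[i + lag]
                      for i in range(len(sound))
                      if 0 <= i + lag < len(motion))
        best = max(best, overlap)
    return best / norm


def most_synchronized(sound, motions, max_lag=2):
    """Return the name of the movement whose onsets best match the sound."""
    return max(motions,
               key=lambda name: sync_score(sound, motions[name], max_lag))
```

A movement whose onsets follow the sound at a constant small delay scores close to 1, while a movement with unrelated timing scores lower, so the sound would be paired with the former.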

Fig.5 Onomatopoeia acquisition model.

Fig.6 Results of the onomatopoeia acquisition model.

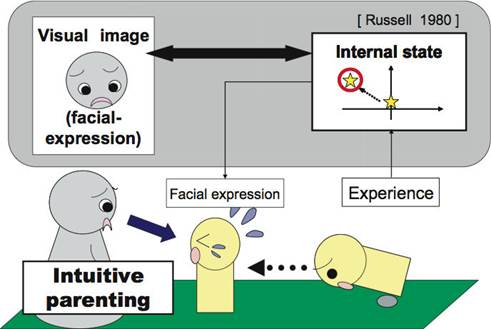

Acquisition of emotional mapping by communication and intuitive parenting

Humans respond to babies unconsciously, a behavior known as intuitive parenting. In intuitive parenting, the caregiver helps the infant learn from experiences and feelings and teaches the infant how to represent those feelings. Intuitive parenting leads infants to form a strong association between the state arising from an experience and a facial expression. We proposed a model that links another person's facial expression with one's own and the other's emotion, based on an emotion dynamics model and intuitive parenting behavior. After the learning phase, the robot can recognize categories of facial expressions in an internal state space, predict the emotional state from another person's face, and generate an appropriate facial expression to express empathy toward that person.
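The association between faces and internal states can be pictured with a very small learner. The sketch below is hypothetical and far simpler than the model described above: it assumes faces arrive as discrete labels, that the internal state is a two-dimensional (pleasure, arousal) point, and that learning is a running average. None of these choices come from the source.

```python
class FaceEmotionMap:
    """Toy association between observed faces and the robot's own state."""

    def __init__(self):
        self._sums = {}    # face label -> [sum of pleasure, sum of arousal]
        self._counts = {}  # face label -> number of observations

    def observe(self, face, own_state):
        """Learning phase: the caregiver shows `face` while the robot is
        in `own_state`, so the two are associated."""
        sums = self._sums.setdefault(face, [0.0, 0.0])
        sums[0] += own_state[0]
        sums[1] += own_state[1]
        self._counts[face] = self._counts.get(face, 0) + 1

    def predict_state(self, face):
        """Estimate the emotional state behind another person's face."""
        n = self._counts[face]
        sums = self._sums[face]
        return (sums[0] / n, sums[1] / n)

    def express(self, own_state):
        """Choose the learned face whose associated state is closest."""
        def dist(face):
            p, a = self.predict_state(face)
            return (p - own_state[0]) ** 2 + (a - own_state[1]) ** 2
        return min(self._counts, key=dist)
```

After a few `observe` calls, `predict_state` maps a face to an estimated state, and `express` maps a state back to a face, which together mirror the recognize, predict, and generate steps named in the paragraph.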

Fig.7 Situation of intuitive parenting.

Fig.8 The behavior of intuitive parenting model.

Acquisition of a primitive communication behavior (peek-a-boo)

We proposed a communication model for the very early period of infancy. Developmental science suggests that infants become sensitive to consistent behavior from caregivers and learn the contingency of the timing of the caregiver's movements at 4 months. We focused on peek-a-boo as an example of communication facilitated by intuitive parenting. The model consists of dopamine neurons in the basal ganglia and an interaction between the hippocampus and the amygdala. Triggered by its own emotion, it memorizes the caregiver's behaviors and predicts those behaviors based on the memories. We implemented the model on a virtual robot and conducted experiments in which a human subject interacted with the robot. The results indicated that the robot's behavior resembled the peek-a-boo behavior of human infants. In the experiment, the model was at first merely surprised by the caregiver's behaviors, while the memory module was not yet sufficiently activated. Once the model had acquired memories of the caregiver's behavior, peek-a-boo raised the model's pleasure index on the basis of its predictions. The model suggests a mechanism for the development of emotion through interaction between a caregiver and a 4-month-old infant [Ogino2007].
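The shift from surprise to pleasure described above can be sketched with a memory of reappearance timings. This is a deliberately minimal, hypothetical stand-in: it does not model dopamine neurons, the hippocampus, or the amygdala, and the class name, the `tolerance` and `min_memories` parameters, and the use of a mean delay as the prediction are all assumptions.

```python
class PeekabooAgent:
    """Toy agent that remembers how long the caregiver stays hidden."""

    def __init__(self, tolerance=0.3, min_memories=3):
        self.tolerance = tolerance        # allowed timing error in seconds
        self.min_memories = min_memories  # memories needed before predicting
        self.delays = []                  # remembered hide-to-reappear delays

    def react(self, delay):
        """React to the caregiver reappearing after `delay` seconds."""
        if len(self.delays) < self.min_memories:
            # Too few memories to predict: the event is simply surprising.
            reaction = "surprise"
        else:
            expected = sum(self.delays) / len(self.delays)
            # A confirmed prediction is treated as pleasurable.
            if abs(delay - expected) <= self.tolerance:
                reaction = "pleasure"
            else:
                reaction = "surprise"
        self.delays.append(delay)
        return reaction
```

Feeding the agent a regular sequence of delays yields surprise for the first few rounds and pleasure afterwards, while a delay far from the remembered timing produces surprise again.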

Fig.9 Early communication acquisition model.

Fig.11 An experiment of peek-a-boo.

Acquisition of eye contact (autism training model)

Autistic children have difficulty communicating because they pay little attention to other people's eyes. In autism therapy, therapists train them to attend to eye contact. We proposed a model of this training for acquiring the ability to make eye contact. The model extracts from the vision sensor the information that predicts the value function most accurately during communication, and this learning process eventually facilitates eye contact. The system consists of a visual processing system and a learning system. The visual processing system computes visual attention from a bottom-up process and a top-down process. The bottom-up process selects candidate attention points based on visual saliency. The top-down process selects candidate attention points based on image features learned by the learning system. The learning system memorizes images from around the times when the robot obtains a value and extracts features common to the memorized images. We implemented the model on virtual robots and conducted interaction experiments with human subjects.

The results show that the robot's attention moves from the face to the eyes, which is very similar to the process observed in autism therapy [Watanabe2008].
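The interplay of bottom-up and top-down attention can be illustrated by mixing two maps and attending to the highest-scoring location. The sketch below is an assumption, not the implemented system: the maps are small hypothetical grids, and the linear mix with weight `w_top` is a stand-in for whatever combination the model actually uses.

```python
def select_attention(saliency, top_down, w_top=0.5):
    """Return the (row, col) with the highest combined attention score.

    saliency : 2D list, bottom-up visual saliency per location
    top_down : 2D list, match to features learned by the learning system
    w_top    : weight of the top-down map (0 = saliency only)
    """
    best_score, best_pos = None, None
    for r, (s_row, t_row) in enumerate(zip(saliency, top_down)):
        for c, (s, t) in enumerate(zip(s_row, t_row)):
            score = (1 - w_top) * s + w_top * t
            if best_score is None or score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos
```

With a low top-down weight the most salient location wins, and as the learned features gain weight the selected point can move to a less salient but more rewarding location, which parallels attention moving from the face to the eyes.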

Fig.12 Eye contact acquisition model.

Fig.13 An experiment of the eye contact acquisition model.

Co-creation of interactive play rule

We proposed a model for the emergence of interaction rules in physical communication between a caregiver and an infant. Previous research describes the progress of human interactive play as divided into observation, imitation, rule understanding, and play change. Our model formulates interactive play computationally as the communication of statistical information and the discovery of statistical consistency. We implemented the model on a virtual agent and conducted interactive play experiments with human subjects. The results indicated that rules emerge from the interaction between a subject and the virtual robot without an explicit trigger [Kuriyama2008].
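Finding statistical consistency in an interaction can be pictured as counting how reliably one action is followed by another. The sketch below is a hypothetical simplification: the action labels, the `find_rules` function, and the thresholds `min_count` and `min_prob` are invented for illustration and are not taken from the model.

```python
from collections import Counter, defaultdict


def find_rules(history, min_count=3, min_prob=0.8):
    """Extract consistent action -> response pairs from an interaction log.

    history : list of (own_action, partner_response) pairs
    A pair becomes a "rule" when the action was seen at least `min_count`
    times and its most frequent response has relative frequency of at
    least `min_prob`.
    """
    counts = defaultdict(Counter)
    for own, partner in history:
        counts[own][partner] += 1

    rules = {}
    for own, responses in counts.items():
        total = sum(responses.values())
        response, n = responses.most_common(1)[0]
        if total >= min_count and n / total >= min_prob:
            rules[own] = response
    return rules
```

No rule is declared in advance here. A pairing is reported only once the log shows it to be frequent and consistent, which is the sense in which a rule could emerge without an explicit trigger.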

Fig.14 A scenario of the progress of interactive play [Rome-Flanders95].

Fig.15 Emergence of interactive play rule.

Fig.16 A view of the interactive play experiment.

Fig.17 The result of the interactive play experiment.