Progress Report

Cybernetic Avatar Technology and Social System Design for Harmonious Co-experience and Collective Ability

2. Parallelization of experience and integrated cognitive behavioral technology (Parallel Agency Research Group)

Progress until FY2022

1. Outline of the project

A body that can perceive and act in different spaces at the same time by parallelizing its own physical experiences.

To transcend the one-person-one-body notion when using a cybernetic avatar (CA), we aim to create technology that allows seamless transitions between multiple CAs. This technology will merge perceptions from different bodies while maintaining a consistent sense of self and bodily subjectivity. It will also preserve and share emotions, movements, and sensations, moving towards liberation from bodily, spatial, and temporal limitations through the use of CAs.

Development of technologies for integrated agency in parallel embodiment

Shunichi KASAHARA (Sony CSL)

Understanding the neuroplasticity mechanisms of adaptation to co-creation action technology

Kazuhisa SHIBATA (RIKEN CBS)

Development of emotion digitalization and experience compression technology based on physiological sensing

Kai KUNZE (Keio Media Design)

2. Outcome so far

- (1) Development of Parallel Ping-Pong CA System

- (2) Development of a fast-switching method for parallel operation of multiple CA bodies

- (3) Realization of a parallel CA operation learning method that uses multiple CA bodies simultaneously

- (4) Morphing identity system that continuously transforms faces to clarify the boundaries of facial identity on a CA

- (5) Development of Frisson waves to measure and share emotional changes

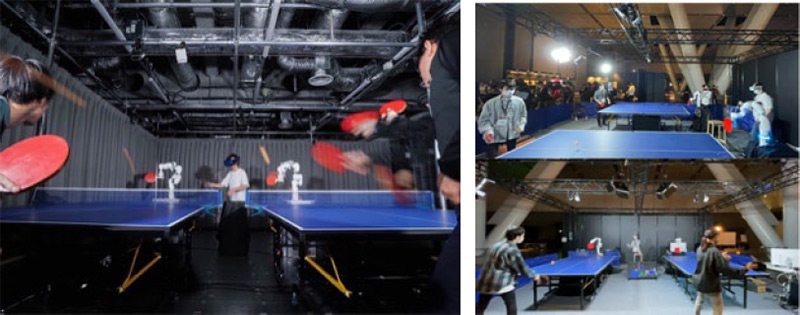

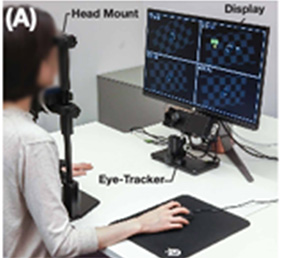

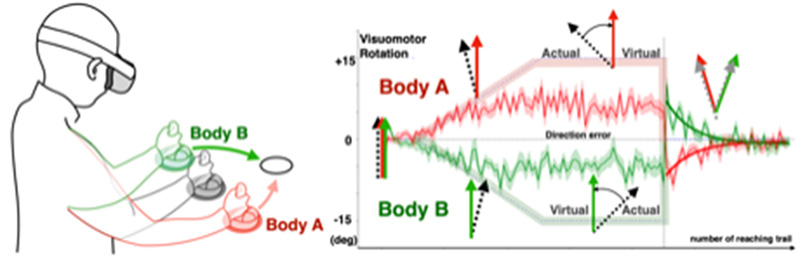

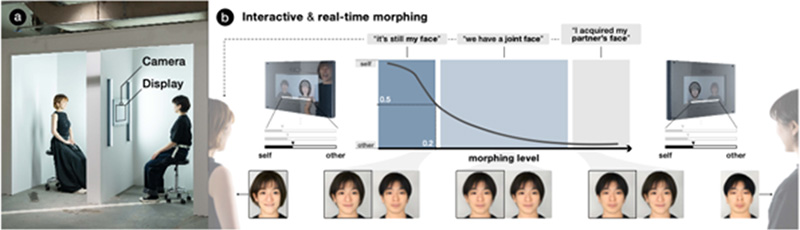

To verify the feasibility of one user operating multiple bodies simultaneously through CAs, in (1) we designed a parallelized CA system for table tennis that enables a single user to control two CAs playing simultaneously at different tables. The viewpoint images from both CAs were merged and presented to the user. In (2) we introduced an interface that uses gaze information to switch rapidly between multiple CAs, achieving faster task performance in a task involving four parallel spatial coordinates. In (3) we explored parallel motion learning with CA bodies in virtual space and identified how different motion traits can be learned simultaneously from a third-person perspective. In (4) we created a machine-learning-driven system that enables seamless facial transformations on the CA, maintaining continuity when handling the facial features of multiple CAs. The system was tested in a series of public experiments to determine, using facial expressions, where the boundary lies between one's own identity and that of others. In (5) we developed emotion estimation and induction technologies to facilitate shared emotional experiences among multiple individuals during music performances.

3. Future plans

We will pursue two goals in parallel: developing interface technologies for seamlessly embodying multiple CAs, and conducting foundational neuroscience research to elucidate how the human brain adapts to parallelized bodily senses. We will also measure and analyze biometric signals, with the aim of compressing and transmitting experiences based on emotional information.