Research Results

Everything around us can be a computer

Realization of a future-type information environment (FY2017)

- Masatoshi Ishikawa (Professor, Graduate School of Information Science and Technology, The University of Tokyo)

- CREST

- Research area: "Creation of Human-Harmonized Information Technology for Convivial Society"; research project: "The construction of harmonized dynamic information space based on high-speed sensor technology"; Principal Researcher (2009-2015)

"A palm" visual tactile display

The world is rapidly shifting toward an advanced information-oriented society, driven by information and communication technologies such as the Internet and smartphones. Yet even though computers and smartphones have advanced and become widespread, there is still room for improvement. For example, some electronic devices respond slowly because so many functions are packed into them, and displays and input devices offer little flexibility.

The research group of Professor Masatoshi Ishikawa, a CREST principal researcher, therefore departed substantially from the conventional concept of computers and smartphones to develop a new system. The system rapidly tracks an object moving freely in space (a human palm, a sheet of paper, a ball, etc.) without constraining its movement, and projects video and presents tactile stimulation onto the object without delay. With this system, everything around us could be turned into a computer.

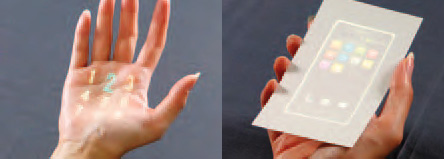

Images projected onto a moving sheet of paper (right) and onto a moving palm, with tactile stimulation also presented on the palm.

Tracking objects quickly and presenting tactile stimulation

The new system integrates two subsystems. The first is the "1ms Auto Pan/Tilt system," which tracks a moving object quickly and accurately. The research group built it by combining "high-speed vision," a high-speed image processing system that extracts the position of a moving object every 2ms (0.002 seconds), with a "high-speed gaze control device" that uses two mirrors. With these technologies, the mirrors are steered left-right and up-down (pan/tilt) so that the object stays at the center of the image, much as an autofocus camera automatically keeps its target in focus.
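The tracking principle described above (measure where the object sits relative to the image center every 2ms, then turn the mirrors to cancel that offset) can be illustrated with a minimal visual-servo loop. This is a sketch under stated assumptions, not the group's controller: the proportional `gain`, the `track` function, and the simplification that vision reports the target directly as pan/tilt angles are all hypothetical.

```python
import math
from dataclasses import dataclass

VISION_PERIOD_S = 0.002  # from the text: object position extracted every 2 ms


@dataclass
class MirrorState:
    pan_deg: float = 0.0   # left-right mirror angle
    tilt_deg: float = 0.0  # up-down mirror angle


def track(target_angles, gain=0.8):
    """Return the residual pointing error (deg) after each 2 ms cycle.

    target_angles: iterable of (pan_deg, tilt_deg), where the object is each frame.
    gain: hypothetical proportional gain in (0, 1].
    """
    state = MirrorState()
    residuals = []
    for pan, tilt in target_angles:
        # Vision step: how far the object sits from the image center.
        err_pan = pan - state.pan_deg
        err_tilt = tilt - state.tilt_deg
        # Gaze-control step: rotate the mirrors to cancel a fraction of that offset.
        state.pan_deg += gain * err_pan
        state.tilt_deg += gain * err_tilt
        residuals.append(math.hypot(pan - state.pan_deg, tilt - state.tilt_deg))
    return residuals


if __name__ == "__main__":
    # A stationary object at (10, 5) degrees observed for 20 frames (40 ms).
    res = track([(10.0, 5.0)] * 20)
    print(f"after 1 frame: {res[0]:.3f} deg, after 20 frames: {res[-1]:.2e} deg")
```

With a stationary object, the residual error in this sketch shrinks by a factor of (1 - gain) every frame. With an object moving at constant angular speed, the loop settles to a steady lag of (1 - gain) × (motion per frame) / gain, so a shorter update period (less motion per frame) gives a smaller lag. This is one way to see why a fast vision and gaze-control loop matters.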

"1ms pan/Tilt system" and "Array of tactile display oscillators"

By aligning a projector with this optical system so that it points in the same direction and at the same angle, video can be projected onto objects moving at high speed. The system has a maximum pan/tilt range of 60 degrees and can change its line of sight by 40 degrees in 3.5ms (0.0035 seconds).
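As a rough check on the quoted figures, sweeping 40 degrees in 3.5ms corresponds to an average angular speed of about 11,400 degrees per second. The snippet below only performs this division and relates it to the 2ms vision interval. It assumes constant speed over the whole sweep, which a real mirror that starts and stops at rest cannot have, so its peak speed must be higher than this average.

```python
SWING_DEG = 40.0          # line-of-sight change quoted in the text
SWING_TIME_S = 0.0035     # time for that change: 3.5 ms
VISION_PERIOD_S = 0.002   # vision update interval quoted earlier: 2 ms


def average_slew_rate(angle_deg: float, time_s: float) -> float:
    """Average angular speed (deg/s) needed to sweep angle_deg in time_s."""
    return angle_deg / time_s


rate = average_slew_rate(SWING_DEG, SWING_TIME_S)
per_frame = rate * VISION_PERIOD_S  # average sweep possible within one vision frame

print(f"average slew rate: {rate:,.0f} deg/s")                 # 11,429 deg/s
print(f"average sweep per 2 ms frame: {per_frame:.1f} deg")    # 22.9 deg
```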

The second subsystem, the "non-contact tactile display," was also developed by the same research group. It uses an array of ultrasonic oscillators to create a tactile sensation on an object (for example, a palm) through the acoustic radiation pressure of airborne ultrasound. The current system can present a force of up to 7.4 gf (gram-force; roughly the weight felt when holding a 500 yen coin), focused on a spot approximately 1 cm in diameter. The system can also change the force magnitude or vibration pattern in 1ms steps and move the focal spot rapidly across the skin.
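The paragraph says the array concentrates its output on a spot about 1 cm across. A standard way to focus a phased array, and a plausible reading of how such a display places its focal point, is to give each oscillator (transducer) a phase delay so that all waves arrive at the focus in phase; moving the focus then only requires recomputing the delays. The sketch below assumes a 40kHz drive frequency, a speed of sound of 343m/s, and a hypothetical 10 x 10 array with 10mm pitch; none of these values come from the text, and `square_grid` and `focusing_phases` are illustrative names. It also converts the quoted 7.4 gf into newtons (about 0.073 N).

```python
import math

# Assumed physical constants (not from the text).
SPEED_OF_SOUND_M_S = 343.0   # air at roughly 20 degrees C
FREQUENCY_HZ = 40_000.0      # hypothetical drive frequency
STANDARD_GRAVITY = 9.80665   # m/s^2, used to convert gram-force to newtons


def gram_force_to_newtons(gf: float) -> float:
    """1 gf is the weight of 1 g under standard gravity."""
    return gf * 1e-3 * STANDARD_GRAVITY


def square_grid(n: int, pitch_m: float):
    """Hypothetical n x n array in the z = 0 plane, centred on the origin."""
    offset = (n - 1) * pitch_m / 2
    return [(i * pitch_m - offset, j * pitch_m - offset, 0.0)
            for i in range(n) for j in range(n)]


def focusing_phases(transducers, focus):
    """Phase delay (radians, in [0, 2*pi)) for each transducer so that all
    waves arrive at `focus` in phase.

    The element farthest from the focus gets zero delay; each nearer element
    is delayed by the extra path length the farthest wave must travel.
    """
    k = 2 * math.pi * FREQUENCY_HZ / SPEED_OF_SOUND_M_S  # wavenumber (rad/m)
    distances = [math.dist(p, focus) for p in transducers]
    farthest = max(distances)
    return [(k * (farthest - d)) % (2 * math.pi) for d in distances]


if __name__ == "__main__":
    array = square_grid(10, 0.010)                      # 10 x 10, 10 mm pitch
    phases = focusing_phases(array, (0.0, 0.0, 0.20))   # focus 20 cm above centre
    print(f"{len(phases)} elements, phase range "
          f"{min(phases):.2f}..{max(phases):.2f} rad")
    print(f"7.4 gf = {gram_force_to_newtons(7.4):.4f} N")
```

In this sketch the focal position is set entirely by the list of phases, so sweeping the stimulation point across the skin amounts to calling `focusing_phases` again with a new focus.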

By integrating the "1ms Auto Pan/Tilt system" and the "non-contact tactile display," the group built a system that projects information conventionally shown on screens onto moving objects without positional misalignment, together with tactile stimulation. In this sense, the system can be regarded as projection mapping extended to moving objects and to tactile presentation.

Overview of the experimental system

Operating aerial images by hand with the "next-generation 3D display"

The research group also developed a next-generation 3D display, the "AIRR (Aerial Imaging by Retro-Reflection) Tablet," which forms aerial images observable from a wide viewing area and can be operated by hand. The display combines two technologies. "AIRR display technology" forms a large aerial image with a wide viewing angle using retro-reflective sheeting, a material that reflects light back in the direction from which it came. "High-speed 3D gesture recognition" uses the "1ms Auto Pan/Tilt system" to recognize the position and movement of an object such as a fingertip every 2ms, which makes it possible to operate the aerial images. The images can be enlarged and rotated with both hands, and can even respond to fast movements such as a punch. The system thus demonstrates a future information environment in which aerial images are operated by gestures.
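Enlarging and rotating an aerial image with both hands can be illustrated by comparing the two tracked hand positions in consecutive frames: the change in distance between the hands gives a scale factor, and the change in the angle of the line joining them gives a rotation. This mapping, the `two_hand_transform` function, and the restriction to a 2D plane are assumptions for illustration; the text does not describe how the AIRR Tablet interprets gestures.

```python
import math


def two_hand_transform(prev_left, prev_right, cur_left, cur_right):
    """Return (scale, rotation_deg) implied by two tracked hand points.

    Each argument is an (x, y) position. Hypothetical mapping: the image scales
    with the distance between the hands and rotates with the angle of the line
    joining them, measured between two consecutive tracking frames.
    """
    v0 = (prev_right[0] - prev_left[0], prev_right[1] - prev_left[1])
    v1 = (cur_right[0] - cur_left[0], cur_right[1] - cur_left[1])
    if math.hypot(*v0) == 0.0:
        raise ValueError("hands coincide in the previous frame; scale undefined")
    scale = math.hypot(*v1) / math.hypot(*v0)
    angle = math.degrees(math.atan2(v1[1], v1[0]) - math.atan2(v0[1], v0[0]))
    # Wrap into [-180, 180) so a small turn across the +/-180 boundary
    # is not reported as a turn of nearly 360 degrees.
    angle = (angle + 180.0) % 360.0 - 180.0
    return scale, angle


if __name__ == "__main__":
    # Hands move apart symmetrically: enlarge by 1.5x, no rotation.
    print(two_hand_transform((-0.10, 0.0), (0.10, 0.0), (-0.15, 0.0), (0.15, 0.0)))
    # Hands swing a quarter turn around their midpoint: rotate by 90 degrees.
    print(two_hand_transform((-0.10, 0.0), (0.10, 0.0), (0.0, -0.10), (0.0, 0.10)))
```

Applying the returned scale and rotation to the displayed image at every tracking frame would make the image follow the hands continuously; with a 2ms frame, each per-frame change stays small.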

Embedding information into existing objects and environments

Most current research on image processing and human interfaces aims at "realizing human-like movement," whereas Professor Ishikawa's group aims at "visual and tactile senses that exceed human ability." When a human sees something and decides to act, information must travel along the path "eye → brain → body movement," which takes approximately 60ms. Professor Ishikawa et al. achieved far faster processing along the path "high-speed vision sensor → computer information processing → high-speed servomotor," exceeding the speed limits of human perception.
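The practical effect of latency is easy to quantify: an object keeps moving while a system reacts, so a projected image or tactile spot lags behind it by speed × latency. The sketch below compares the roughly 60ms human loop quoted above with the 2ms vision interval given for the tracking system, using a hypothetical hand speed of 1 m/s. The 2ms figure only stands in for the full system's reaction time, which the text does not state.

```python
HUMAN_LOOP_S = 0.060      # eye -> brain -> body, as quoted in the text
VISION_PERIOD_S = 0.002   # high-speed vision update interval from the text
HAND_SPEED_M_S = 1.0      # hypothetical speed of a moving palm


def lag_mm(speed_m_s: float, latency_s: float) -> float:
    """Distance (mm) an object travels during latency_s at speed_m_s."""
    return speed_m_s * latency_s * 1000.0


if __name__ == "__main__":
    for label, latency in [("human loop", HUMAN_LOOP_S), ("2 ms vision", VISION_PERIOD_S)]:
        print(f"{label}: object moves {lag_mm(HAND_SPEED_M_S, latency):.1f} mm")
```

At 1 m/s, this gives about 60 mm of misalignment for the human loop versus about 2 mm at a 2ms update, a 30-fold difference.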

Conventional information devices embed intelligent functions into dedicated objects such as tablets. This system instead embeds information into existing objects and environments themselves, including moving objects such as a human palm or a sheet of paper as well as empty space, to make intuitive operation possible. This completely new paradigm points toward dramatic future changes in our information environment, in which the information world and the physical world may become one.