Progress Report

Construction of an AIoT-based universal emotional state space and evaluation of well-/ill-being states

[1] Constructing a Human Emotional State Space by AIoT

Progress until FY2024

1. Outline of the project

In this project, we aim to develop AIoT (AI×IoT) technology that objectively estimates emotional states in daily life on a cloud platform, using multidimensional psycho-physiological data (e.g., voice data, physical activity, heart rate, respiratory rate, and recording context) measured by IoT devices. The project is divided into the following three research topics:

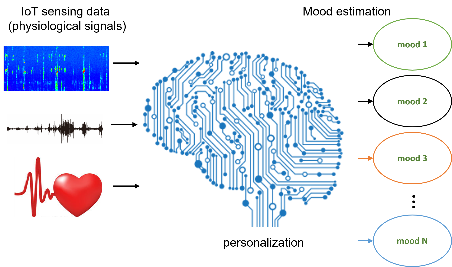

1. Development of human emotion estimation technology with clinical validity using multidimensional psycho-physiological data

   - Summary: We develop clinically valid technology that enables the objective estimation of various emotional states on a cloud platform, using multidimensional psycho-physiological data (e.g., voice data, physical activity, heart rate, and respiratory rate) measured by IoT devices in daily life.

2. Assessment of psycho-physiological data in patients

   - Summary: We collect psycho-physiological data from patients with mental disorders to construct a clinically valid human emotional state space.

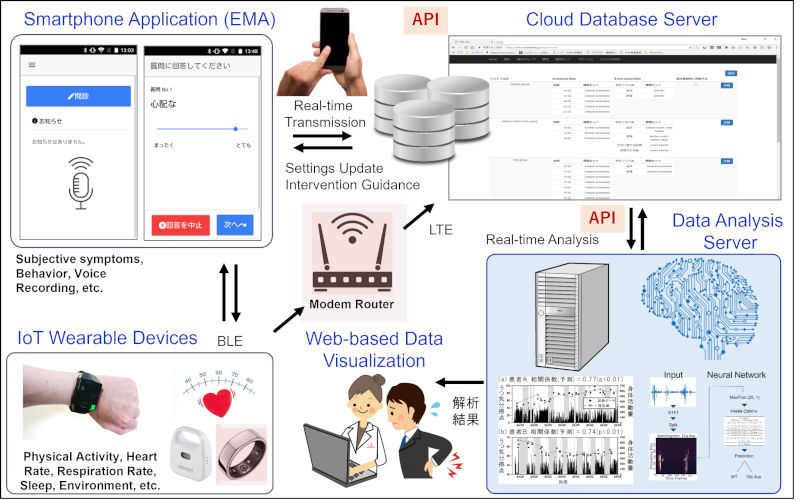

3. Development of a translational IoT cloud system

   - Summary: We develop a cloud-based IoT system capable of acquiring continuous, real-time psycho-physiological data on a large scale from both humans and animals (mice and rats) in real-world settings.

2. Outcome so far

To develop human emotion estimation technology, we constructed machine learning models that estimate self-reported emotion scores recorded in daily life from simultaneously measured physiological signals (Figure 1).
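The report does not specify how self-reports and sensor data are aligned. As a minimal sketch, assuming each self-reported score is paired with the physical-activity samples from a fixed 60-minute window preceding it (the window length, the mean/SD features, and the function names `window_features` and `build_dataset` are all hypothetical), the training pairs for such a model could be assembled as follows:

```python
from datetime import datetime, timedelta
from statistics import mean, pstdev

# Hypothetical window length; the report does not state one.
WINDOW = timedelta(minutes=60)


def window_features(activity, report_time, window=WINDOW):
    """Summarize activity samples recorded in the window before a self-report.

    activity: list of (timestamp, value) tuples, e.g. activity counts per minute.
    Returns (mean, population SD) of the values in [report_time - window, report_time),
    or None if no samples fall in that window.
    """
    values = [v for t, v in activity if report_time - window <= t < report_time]
    if not values:
        return None
    return (mean(values), pstdev(values))


def build_dataset(activity, reports, window=WINDOW):
    """Pair each self-report (timestamp, score) with features from its preceding window."""
    X, y = [], []
    for report_time, score in reports:
        feats = window_features(activity, report_time, window)
        if feats is not None:  # skip reports with no preceding sensor data
            X.append(feats)
            y.append(score)
    return X, y


if __name__ == "__main__":
    start = datetime(2024, 4, 1, 9, 0)
    activity = [(start + timedelta(minutes=i), float(i % 5)) for i in range(120)]
    reports = [(start + timedelta(minutes=90), 3.0), (start - timedelta(hours=2), 1.0)]
    X, y = build_dataset(activity, reports)
    print(X, y)
```

The resulting feature rows `X` and scores `y` could then be passed to any regression or classification model; the report does not state which model family was used.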

[Main Achievements by FY2023]

- Estimated scores for four emotions (depressed mood, anxiety, positive mood, and negative mood) from spontaneous physical activity (SPA) data, with an error margin of approximately 20%.

- Estimated the levels (high/low) of four emotions from SPA data with an accuracy exceeding 70%.

- Estimated nine emotions (“vigorous,” “gloomy,” “concerned,” “happy,” “unpleasant,” “anxious,” “cheerful,” “depressed,” and “worried”) from voice data with an average concordance correlation coefficient of 0.55 and a maximum of 0.61.

- Developed a multimodal machine learning model that combines multiple physiological signals (voice and physical activity), improving the accuracy of emotion estimation.

- Integrated our in-house IoT cloud system with a ring-type wearable device designed for unobtrusive ("subtle") sensing, and conducted a proof-of-concept study with residents of a dementia care unit in an assisted living facility.

- Extended the IoT cloud system for animal use and developed wearable sensors specifically designed for mice.
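The concordance correlation coefficient (CCC) reported above is Lin's measure of agreement, which penalizes both weak correlation and systematic offsets between estimated and self-reported scores. The sketch below computes it, together with a high/low accuracy under an assumed median-split threshold; the report does not say how "high" and "low" levels were defined, and the function names are hypothetical.

```python
from statistics import fmean, median


def concordance_correlation(x, y):
    """Lin's concordance correlation coefficient between two equal-length sequences.

    CCC = 2*cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y))**2),
    using population (1/n) variances and covariance.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mx, my = fmean(x), fmean(y)
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    denom = vx + vy + (mx - my) ** 2
    if denom == 0:
        raise ValueError("CCC is undefined for two identical constant sequences")
    return 2 * cov / denom


def high_low_accuracy(true_scores, predicted_scores, threshold=None):
    """Accuracy of high/low labels obtained by thresholding both sequences.

    Assumed convention: the threshold defaults to the median of the true
    scores; scores above it are labeled "high", all others "low".
    """
    if len(true_scores) != len(predicted_scores) or not true_scores:
        raise ValueError("need two non-empty sequences of equal length")
    if threshold is None:
        threshold = median(true_scores)
    hits = sum((t > threshold) == (p > threshold)
               for t, p in zip(true_scores, predicted_scores))
    return hits / len(true_scores)
```

For example, predictions that equal the scores [1, 2, 3, 4] shifted up by 1.0 have a Pearson correlation of 1 but a CCC of 2.5 / 3.5 ≈ 0.71, which is why CCC is a stricter criterion than correlation alone.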

[Main Achievements in FY2024]

We developed an emotion estimation model using a Japanese speech corpus (nine emotions simulated by 100 professional voice actors) and evaluated its accuracy, its applicability to multilingual data, and the geometric relationships among emotions. Additionally, using patients' speech data, we developed a preliminary model that estimates six emotions and symptoms, with concordance correlation coefficients ranging from 0.451 to 0.554. Moreover, we integrated the IoT cloud system with unobtrusive sensing technologies and animal-monitoring devices; this integrated system is now progressing toward demonstration and societal implementation. Finally, we collected data from patients with mood disorders, anxiety disorders, and alexithymia, amounting to the equivalent of 104 subjects based on a two-week assessment period per subject.
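The report does not describe how the geometric relationships among emotions were analyzed. One common approach, assumed here purely for illustration, is to average a model's embedding vectors for each emotion and compare the resulting centroids by cosine similarity; the embeddings, the toy labels, and the function names below are hypothetical.

```python
import math


def centroid(vectors):
    """Element-wise mean of equal-length embedding vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def cosine(u, v):
    """Cosine similarity between two vectors (assumes neither is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def emotion_similarity(embeddings_by_emotion):
    """Pairwise cosine similarity between per-emotion centroids.

    embeddings_by_emotion: dict mapping an emotion label to a list of
    embedding vectors (e.g. hypothetical utterance-level model outputs).
    Returns {(label_a, label_b): similarity} for each pair with label_a < label_b.
    """
    cents = {label: centroid(vs) for label, vs in embeddings_by_emotion.items()}
    labels = sorted(cents)
    return {(a, b): cosine(cents[a], cents[b])
            for i, a in enumerate(labels) for b in labels[i + 1:]}


if __name__ == "__main__":
    toy = {
        "happy": [[1.0, 0.0], [1.0, 0.2]],
        "cheerful": [[0.9, 0.1], [1.1, 0.1]],
        "gloomy": [[-1.0, 0.0], [-1.0, -0.2]],
    }
    for pair, sim in emotion_similarity(toy).items():
        print(pair, round(sim, 3))
```

With these toy two-dimensional embeddings, in which "happy" and "cheerful" point in the same direction and "gloomy" points the opposite way, the sketch returns similarities of 1.0 and -1.0, respectively.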

3. Future plans

We aim to establish clinically valid AIoT technologies that objectively estimate intra-day emotional states and their fluctuations across a range of emotions, using multiple psycho-physiological signals (such as voice, continuous physical activity, heart rate, respiratory rate, and measurement context) collected through wearable and IoT devices in everyday environments. We also plan to further develop business applications of these technologies.

(NAKAMURA Toru: The University of Osaka

YAMAMOTO Yoshiharu: The University of Tokyo

YOSHIUCHI Kazuhiro: The University of Tokyo)