Constructing and testing hypotheses on development through experiments with robots

Self-other distinction by a robot with whole-body tactile sensors

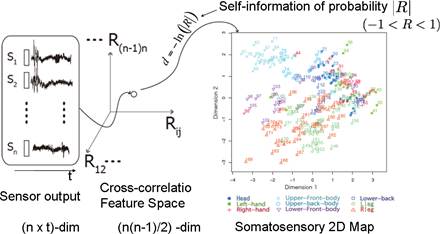

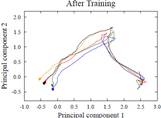

Since CB2 is covered by soft skin with few junctions, many of the tactile sensors embedded in it are activated by its own movements. It therefore has to distinguish self-induced tactile information from other-induced tactile information. This issue is deeply related to the mechanism of self-other distinction in human children. Our group considers that not only self-induced sensorimotor experiences but also experiences of interaction with others influence the process of self-other distinction. Assuming that time series of whole-body skin sensor data are collected during tactile experiences, we proposed a learning method that classifies the time series of sensory responses as self-induced or other-induced by extending the boosting algorithm. We defined a somatosensory map based on the co-occurrence of tactile sensor activations while the robot is being touched by others: a two-dimensional plane on which each sensor is placed according to the similarity of correlations (see Fig. 1) [Noda2010]. We compared the performance of classifiers constructed on the somatosensory map with that of classifiers constructed on the physical layout of the tactile sensors, and found that the classifier constructed on the somatosensory map performed better.
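The idea of placing sensors by the similarity of their co-activation can be illustrated with a minimal sketch. It assumes that the similarity of two sensors is the Pearson correlation of their activation time series recorded while the robot is touched, converts it to the distance sqrt(2(1 - r)), and embeds the sensors in two dimensions with classical multidimensional scaling (MDS). The choice of MDS and the function names `pearson` and `somatosensory_map` are illustrative assumptions, not necessarily the embedding used in [Noda2010], and the boosting classifier is not shown.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def somatosensory_map(series, dims=2, iters=300):
    """Place each tactile sensor on a plane so that co-activated sensors lie close together.

    series: one activation time series per sensor, recorded while the robot is touched.
    Returns one coordinate tuple per sensor (classical MDS via power iteration).
    """
    n = len(series)
    # 2(1 - r) equals the squared Euclidean distance between the series after centring
    # and scaling to unit norm, so the double-centred matrix B is positive semidefinite.
    d2 = [[max(0.0, 2.0 * (1.0 - pearson(series[i], series[j]))) for j in range(n)]
          for i in range(n)]
    row_mean = [sum(row) / n for row in d2]
    grand = sum(row_mean) / n
    # Double centring: B = -1/2 * J D^2 J
    b = [[-0.5 * (d2[i][j] - row_mean[i] - row_mean[j] + grand) for j in range(n)]
         for i in range(n)]
    coords = [[0.0] * dims for _ in range(n)]
    for k in range(dims):
        v = [1.0 + 0.01 * i for i in range(n)]  # deterministic, non-uniform start vector
        lam = 0.0
        for _ in range(iters):  # power iteration for the leading eigenvector of B
            w = [sum(b[i][j] * v[j] for j in range(n)) for i in range(n)]
            lam = math.sqrt(sum(x * x for x in w))
            if lam == 0.0:
                break
            v = [x / lam for x in w]
        for i in range(n):
            coords[i][k] = v[i] * math.sqrt(lam)
        # Deflate B so that the next pass finds the next eigenvector.
        b = [[b[i][j] - lam * v[i] * v[j] for j in range(n)] for i in range(n)]
    return [tuple(c) for c in coords]
```

With two pairs of sensors driven by independent touch signals, the members of each pair end up close together on the map even though no physical layout is supplied; the map reflects co-activation only.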

Fig. 1 Somatosensory map that CB2 acquired through interaction with the environment.

To classify self-induced and other-induced experiences, effective actions should be selected. We considered highly reproducible actions suitable for this purpose, since they induce a statistical bias in the sensory data. Through experiments with CB2, we found that sensor noise, the stability of motion control, and the balance between the two influence the stability of motion.

Learning whole-body movement based on a biased random walk

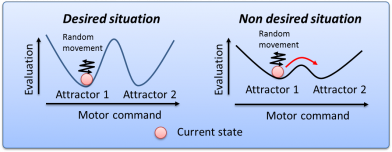

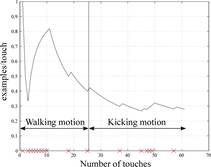

Infants’ behavior of moving their bodies at random is called motor babbling, and it is considered to let them recognize the relationships among their body parts and muscle movements. If infants had to learn such movements for each muscle separately, the learning space would become so enormous that the learning time would increase exponentially. This, however, is not the case. Here, we focused on the mechanism of yuragi (biological fluctuation) as one possible mechanism for reducing the learning space. In the yuragi mechanism, random exploration driven by noise and local exploration driven by attractors are selected according to an evaluation of the current state called activity (Fig. 2). Such a mechanism is considered to let biological systems exploit noise and attractors to explore more suitable states efficiently. Based on this mechanism, we proposed a random exploration method biased by the evaluation of the current state and applied it to M3-Neony to test whether it could acquire a crawling motion [Fabio09][Fabio10]. In this experiment, the robot was assumed to move its arms and legs periodically and to explore the space of phase differences among its four limbs. Multiple attractors were placed in this space, and the speed of forward locomotion was adopted as the evaluation measure. With inappropriate phase differences the robot cannot move forward, so its activity decreases and it starts to explore more suitable phase differences at random. Once it finds phase differences that enable forward locomotion (see Fig. 3), it maintains them to keep crawling. Utilizing body attractors, such as easily producible movements, enables a robot with many degrees of freedom to learn motor skills in a lower-dimensional space. We believe that this study provides a clue to the mechanism by which infants learn motor skills through exploratory movements.
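The activity-biased exploration described above can be sketched with the commonly cited form of the yuragi equation, dx/dt = A·f(x) + η, where A is the activity, f is the attractor term, and η is noise. In this sketch the four-limb phase space is reduced to a single phase difference for brevity, four attractors are placed evenly on the circle, and `speed` is a hypothetical stand-in for the measured forward speed; the function names and all parameter values are assumptions, not settings from the M3-Neony experiment.

```python
import math
import random

TWO_PI = 2.0 * math.pi


def wrap(d):
    """Map an angle difference into [-pi, pi)."""
    return (d + math.pi) % TWO_PI - math.pi


def speed(phi, best=math.pi / 2, width=0.3):
    """Hypothetical forward speed in [0, 1] of a gait with phase difference phi."""
    return math.exp(-wrap(phi - best) ** 2 / (2.0 * width ** 2))


def yuragi_search(phi0=math.pi, steps=80_000, dt=0.01, gain=20.0, noise=0.4,
                  n_attractors=4, seed=0):
    """Activity-biased random walk: dphi = A * f(phi) dt + noise * dW.

    A (activity) is the current evaluation, and f pulls phi toward the nearest attractor.
    High A: the attractor term dominates and the current gait is kept.
    Low A: the noise term dominates and phi wanders at random.
    Returns the final phase difference and the activity at every step.
    """
    rng = random.Random(seed)
    attractors = [TWO_PI * i / n_attractors for i in range(n_attractors)]
    phi = phi0
    history = []
    for _ in range(steps):
        activity = speed(phi)
        nearest = min(attractors, key=lambda a: abs(wrap(phi - a)))
        drift = -gain * wrap(phi - nearest)
        # Euler-Maruyama step: drift scaled by activity, plus constant-amplitude noise.
        phi += activity * drift * dt + noise * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        phi %= TWO_PI
        history.append(activity)
    return phi, history
```

Starting from a phase difference that yields almost no forward speed, the walk wanders under noise until it enters the basin of the attractor with high speed; from then on the attractor term dominates and the gait is retained, which mirrors the behavior described for crawling.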

Fig.2 Yuragi mechanism

Fig. 3 Acquisition of crawling movement based on a biased random walk

Dynamic motion based on others’ help

Help from others is considered to support motor learning by humanoid robots whose complex bodies involve many degrees of freedom. Infants whose motor capabilities are still immature learn target motions by being helped by other people. Here, synchronization between an infant and the caregiver is considered important for the infant to make use of the caregiver’s support in motor learning. We address the problems of motor learning through physical interaction on the view that the development of the capability to synchronize with others is the basis for the development of communication capability.

Since we assume that infants use their flexible bodies to elicit others’ help effectively in accomplishing motions, we propose a control method for CB2, which has flexible joints, that accomplishes motions based on a person’s physical support.

In this method, to make use of its flexible body, the robot treats several postures that are considered via-points of the movement as target postures, and accomplishes motions by switching among them according to the other’s help [Ikemoto08h].

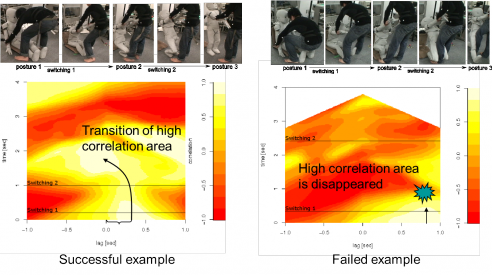

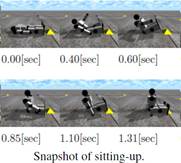

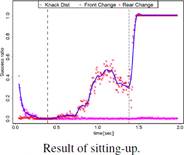

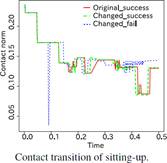

Here, we addressed the motion shown in Fig. 4, in which a person holds both arms of CB2 to make it sit up. We ran experiments in which several subjects were asked to make it sit up, and found that whether they succeeded in helping the motion was reflected in the correlation between changes in the postures of CB2 and of the caregiver (see Fig. 5). Furthermore, we found that the timing of switching the target posture was important for utilizing the physical help. We then proposed a method to learn the timing of switching target postures according to the goodness (good or bad) of the achieved motions as evaluated by the caregivers. Experimental results showed that the proposed method, which focuses on timing, enabled CB2 to improve its motion within about thirty trials. Furthermore, analysis of the caregivers’ motion showed that the caregivers also adapted their helping motions to the robot; in other words, mutual adaptation occurred between CB2 and the caregivers [Ikemoto09].
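One way to quantify the coupling between the robot’s and the caregiver’s posture changes is a lagged correlation of frame-to-frame changes. The sketch below assumes that both postures are sampled as one angle per frame at the same rate (for example, a trunk pitch angle for each); these signals, the lag window, and the function names are assumptions for illustration, not the analysis behind Fig. 5.

```python
import math


def changes(xs):
    """Frame-to-frame posture changes."""
    return [b - a for a, b in zip(xs, xs[1:])]


def pearson(x, y):
    """Pearson correlation of two equal-length series (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def lagged_coupling(robot_angle, caregiver_angle, max_lag=10):
    """Return (lag, r) that maximizes the correlation of posture changes.

    A positive lag means the robot's change follows the caregiver's by `lag` frames;
    a negative lag means the caregiver's change follows the robot's.
    """
    dr, dc = changes(robot_angle), changes(caregiver_angle)
    n = min(len(dr), len(dc))
    dr, dc = dr[:n], dc[:n]
    best = (0, -2.0)
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = dc[:n - lag], dr[lag:]   # pairs (caregiver[t], robot[t + lag])
        else:
            x, y = dc[-lag:], dr[:n + lag]  # pairs (caregiver[t + |lag|], robot[t])
        r = pearson(x, y)
        if r > best[1]:
            best = (lag, r)
    return best
```

Correlating changes rather than raw angles removes the slow common trend of the sitting-up motion, so a high peak correlation indicates that the robot’s posture moves with the caregiver’s help rather than merely drifting in the same direction.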

Fig. 4 Sitting-up motion with another’s help. Postures 2 and 3 are target postures.

Fig. 5 Analysis of the assisted motion from the viewpoint of the mutual correlation of posture changes.

Fig. 6 Improvement of the sitting-up motion through learning switching timings (top: before learning, bottom: after learning)

Here, we focused on the “knack”, a state that strongly influences success in a motion, in motor learning by CB2. For motor learning that focuses on knacks, they must be represented with general variables that are not specific to the target robot. We propose a method to represent features of motion using motor commands and evaluations. Probability distributions reflecting the robot’s task are acquired without a priori knowledge, which makes it possible to estimate the states that are important for achieving motions. We confirmed the validity of the proposed method with a simulator for the motions of raising the upper body, rolling over, and stepping forward (Fig. 7 left and center). In the simulation, we also observed that the estimated motor states depend largely on the contact situation between the robot body and the environment. This implies that the motion dynamics change easily in such states and that the proposed method could automatically find the boundaries of these dynamical changes.
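As a deliberately simple stand-in for the probabilistic representation described above, the sketch below scores each step of a motion by how much more tightly the motor commands of the best-evaluated trials cluster than those of all trials; steps where success strongly constrains the command are candidate knack states. The variance-ratio criterion, the top-30% cutoff, the synthetic data generator, and all names are hypothetical, not the published method.

```python
import random
import statistics


def knack_scores(commands, scores, top_frac=0.3):
    """Score each step of a motion by how strongly success constrains its motor command.

    commands[k][t]: motor command of trial k at step t; scores[k]: evaluation of trial k.
    Returns var(all trials) / var(best trials) per step; large values mark steps where
    well-evaluated trials agree closely (candidate "knack" states).
    """
    n_trials = len(commands)
    order = sorted(range(n_trials), key=lambda k: scores[k], reverse=True)
    best = order[:max(2, int(top_frac * n_trials))]
    ratios = []
    for t in range(len(commands[0])):
        all_vals = [commands[k][t] for k in range(n_trials)]
        best_vals = [commands[k][t] for k in best]
        ratios.append(statistics.pvariance(all_vals)
                      / (statistics.pvariance(best_vals) + 1e-9))
    return ratios


def synthetic_trials(n_trials=200, n_steps=5, key_step=2, seed=0):
    """Hypothetical data: random commands, and only the command at key_step affects the score."""
    rng = random.Random(seed)
    commands = [[rng.uniform(-1.0, 1.0) for _ in range(n_steps)] for _ in range(n_trials)]
    scores = [-abs(c[key_step] - 0.5) for c in commands]
    return commands, scores
```

On the synthetic trials only the step that actually determines the evaluation receives a high score, while the other steps stay near a ratio of 1; no robot-specific variable is used, only commands and evaluations.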

Fig. 7 Sitting-up motion (left), “knack” state in the sitting-up motion (center), and its physical meaning (right)

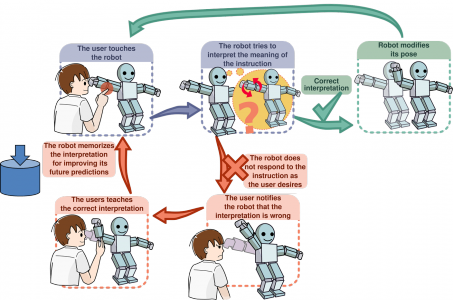

Furthermore, as a study of motor learning involving physical interaction, we addressed the problem of motor instruction through touch (Teaching by Touching). This issue relates to exploring the mechanism for understanding others’ intentions. We developed a method for acquiring a target motion by understanding the intention behind the supervisor’s touch instructions [Fabio09][Fabio10]. The robot produces the motion that the supervisor probably intends by modifying its own motion according to the touch instructions. It constructs a mapping from tactile information to motion modifications (e.g., when its right arm is touched, the supervisor might intend it to move its whole right arm). This mapping is learned by a supervised learning method while the robot generates its motions. An experiment using a computer simulation with a computer-graphics robot agent showed that the agent could learn the intention of touching through generating motions. This experiment also showed that human instructors touch the robot’s tactile sensors not only in simple ways, such as to move a specific joint, but also in more complex (or abstract) ways (e.g., touching its head to make its lower limbs bend into a squatting position). It was argued that the development of children’s understanding of others’ intentions is promoted in similar situations, where the instructor’s hidden internal state must be estimated. Furthermore, the proposed method was applied to the robot M3-Neony so that it generates motions by learning the intentions behind touches.
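The mapping from touch to motion modification can be illustrated with a linear map fitted by the delta rule (least mean squares). The sketch assumes that touches are binary sensor vectors and that each training pair carries the joint-angle change the instructor intended; the linear form, the learning rate, and the names `train_touch_mapping` and `predict` are assumptions, since the text above states only that the mapping is learned by supervised learning.

```python
import random


def predict(w, touch):
    """Joint-angle changes predicted for one touch pattern (w: joints x sensors)."""
    return [sum(wi * ti for wi, ti in zip(row, touch)) for row in w]


def train_touch_mapping(samples, n_sensors, n_joints, lr=0.1, epochs=200, seed=0):
    """Delta-rule (LMS) fit of a linear map W: touch pattern -> joint-angle change.

    samples: list of (touch, delta) pairs, where touch has n_sensors values and
    delta holds the n_joints joint changes the instructor intended.
    """
    rng = random.Random(seed)
    w = [[0.0] * n_sensors for _ in range(n_joints)]
    data = list(samples)
    for _ in range(epochs):
        rng.shuffle(data)  # present the pairs in a different order each epoch
        for touch, delta in data:
            pred = predict(w, touch)
            for j in range(n_joints):
                err = delta[j] - pred[j]
                for i in range(n_sensors):
                    w[j][i] += lr * err * touch[i]
    return w
```

A linear map can express the abstract instructions mentioned above as long as they are consistent: a weight from a head sensor to the knee joints, for instance, encodes "touching the head means bend the knees".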

An experiment in which several subjects were asked to instruct motions to the real robot also showed that there were some common patterns among subjects, in other words, in the mapping between touches and the joints to be moved. The proposed method is basically only for modifying motion, not for exploring. To consider exploratory movement, we represented robot motions using a CPG network. Computer simulation showed that the CPG parameters for the target motion could be explored based on instructions. It was argued that the robot’s exploratory movement gives the instructor redundancy in interpreting its intention, which makes it possible to study the mutual understanding of intentions.
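A CPG network of the kind mentioned above is often built from coupled phase oscillators. The sketch below assumes Kuramoto-style coupling that pulls each pair of oscillators toward a desired phase offset, with each joint command given by an amplitude times the sine of its phase; the offsets, amplitudes, and frequency are the kind of parameters that instruction-driven exploration would adjust. The oscillator model, parameter values, and the name `simulate_cpg` are illustrative assumptions, not the CPG used in the study.

```python
import math


def simulate_cpg(offsets, amplitudes, init, omega=2.0 * math.pi, coupling=2.0,
                 dt=0.001, t_end=20.0):
    """Phase-oscillator CPG integrated with forward Euler.

    dphi_i/dt = omega + K * sum_j sin(phi_j - phi_i - (offsets[j] - offsets[i]))
    Each pair is pulled toward its desired phase offset, and joint i outputs
    amplitudes[i] * sin(phi_i). Returns the final phases and joint commands.
    """
    n = len(offsets)
    phi = list(init)
    for _ in range(int(t_end / dt)):
        dphi = [omega + coupling * sum(
                    math.sin(phi[j] - phi[i] - (offsets[j] - offsets[i]))
                    for j in range(n) if j != i)
                for i in range(n)]
        phi = [p + d * dt for p, d in zip(phi, dphi)]
    return phi, [a * math.sin(p) for a, p in zip(amplitudes, phi)]
```

Because the phase relations settle to the requested offsets from generic initial conditions, exploring a motion reduces to exploring a handful of offsets and amplitudes instead of full joint trajectories.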

Fig. 8 The problem of teaching by touching and a model of understanding the instructor’s intention

Fig. 9 Learning residual for estimating the instructor’s intention and experiment with M3-Neony

Modeling interpersonal cognition based on mutual responses

Face-to-face interaction is a process in which the participants respond to each other. Therefore, how humans recognize others’ responses and how they respond to others are considered basic problems in modeling interpersonal cognition. We focused on gaze, a representative modality reflecting social relationships, and tried to verify hypotheses on the relationship between responsiveness and interpersonal cognition through experiments on gaze communication between humans and robots. Specifically, it was conjectured that humans do not perceive sociality in a robot whose gaze responses [Yoshikawa07] or mimicking neck movements [Shimada08] have latencies that are too small or too large.

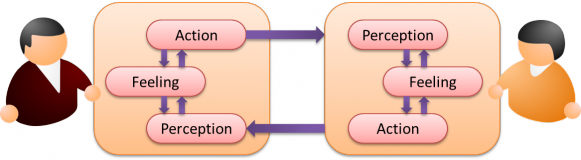

This hypothesis focused on how humans recognize responses perceived from others, i.e., only half of the phenomenon of face-to-face interaction. We then extended it by assuming that a person and a robot respond to each other via gaze. In other words, we considered not only the recognition of the other’s responses but also one’s own actions toward the other in modeling one’s recognition of the other. This hypothesis implies that a human’s feeling of communication with another agent is a bidirectional phenomenon: it is conjectured to originate not only from the perception of being properly responded to by the other but also from inferences based on one’s own actions in responding to the other. Therefore, it is considered not only that experiences of such perception or action cause the feeling but also that the feeling biases perception and action toward continuing the communication. We call this the mutual excitation hypothesis (see Fig. 10). One part of the hypothesis, the bidirectional relationship between action and feeling, was tested in an interaction experiment using gaze responsiveness [Yoshikawa08a] (see Fig. 11) and in an interaction experiment using responsiveness in approaching. Furthermore, we examined the validity of the bidirectional relationship between action and feeling by studying the relationship between unconscious mimicry and the feeling of communication in a conversation experiment with an android robot [Lee08]. We also examined another part of the hypothesis, the bidirectional relationship between action and perception, in an experiment of conversation with a computer, in which participants were asked to report the computer’s response timings while the feeling of communication was controlled by selecting the content uttered by the computer.

Fig. 10 Mutual excitation model of interpersonal cognition

Fig. 11 Interaction experiment focusing on the responsiveness of gaze

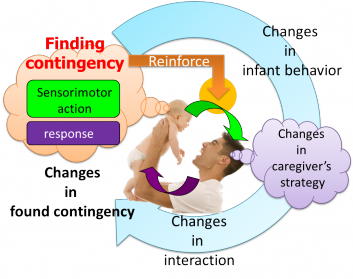

Social behavior learning based on others’ contingency

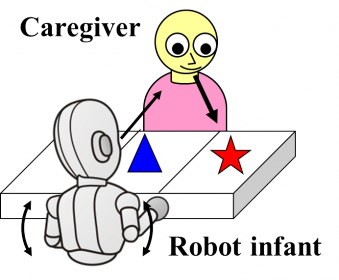

To understand the cognitive developmental process through face-to-face interaction between a human infant and others, it is necessary to model both how multiple social behaviors are acquired and how interaction with the caregiver influences that acquisition, in other words, the whole dynamics of the social system. However, since a large number of parameters must be considered to model such dynamics, computer simulation of the cognitive developmental process is a feasible approach, in which we can iterate model construction, evaluation, and modification. In 2007, we considered that reproducing causality is essential for the gradual development of social behavior. We conducted a computer simulation to test whether reproducing causality leads a robot agent to acquire behaviors related to joint attention or social referencing (see Fig. 12) [Sumioka08]. From another point of view on social development, we argued that the capabilities to acquire word meanings and to perform view transformation might be a basis of social development [Mayer08].

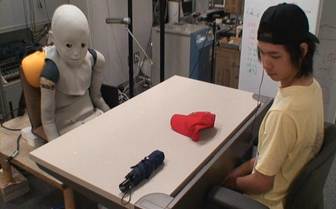

In 2008, we continued examining and modifying the model of infant social development using computer simulation. Here, we addressed multiple processes of social behavior. Assuming that joint attention can be performed through multimodal functions such as gaze following and word comprehension, we ran an experiment in which CB2 acquired joint attention (see Fig. 13) and examined the validity of a model that enables mutually facilitative learning of these functions [Yoshikawa08]. We also extended the model for acquiring joint attention and social referencing through reproducing causality so that it performs turn-taking between a human and a robot using a motion trigger [Sumioka08]. This was examined in an experiment with CB2 and discussed from the viewpoint of biological plausibility [Sumioka08].

Fig. 12 Model of incremental acquisition of social behavior based on reproducing causality, and the computer simulation environment for modeling the process of acquiring behavior related to joint attention

Fig. 13 Experiment on learning joint attention based on gaze following and word comprehension

After 2009, we focused on the mutual facilitation between the development of gaze following and that of word comprehension. We argued for the validity of the model by considering the development of perceptual sensitivity to others’ gaze, faces, and voices as well as of precision in motion control. As another example of a mutually facilitative process, we also addressed the simultaneous development of word comprehension and vocal imitation. We proposed a learning mechanism for mapping among three representations related to these developments: representations of objects, of others’ voices, and of one’s own voice. It integrates multiple signals obtained through propagation along different paths, based on what we call subjective consistency, to obtain a more reliable signal for learning the mapping. The proposed method enabled acquisition of correct mappings for word comprehension and vocal imitation even when the caregiver showed the correct correspondences by imitating or labeling only infrequently [Sasamoto09].

Furthermore, we extended the developmental model based on reproducing causality by making the agent aware of the history of its own actions. Computer simulation with the extended model showed that the simulated agent appeared to acquire object permanence before acquiring social functions such as joint attention, an order considered more plausible. On the other hand, causality may be preferred not only by infants but also by caregivers, and it might be more appropriate to regard infant development as guided by the interaction of their preferences. To model the interaction of these preferences, we addressed mutually imitative interaction between an infant agent and a caregiver agent, through which the infant learns the correspondence of body parts based on the contingency observed in the interaction. Here, we focused on predictability as one aspect of the preference for causality in the computer simulation. As a result, we observed a developmental pattern resembling that of human infants, namely a transition from self-imitation to other-imitation, when the agents preferred predictable events but lost this preference once events became too predictable [Minato10]. As the synergistic intelligence mechanism group has argued from the viewpoint of brain science, a child’s preference for causality or predictability is considered an important building block for prediction or mental simulation based on an ego-centric model. Furthermore, the synergistic intelligence mechanism group conjectured that changes in neurotransmitter density might be related to the development of such preferences. Integrating the computational processes of such transmitters into our model remains future work.

Although it was difficult to verify the validity of the model with computer simulation alone, we succeeded in applying the developmental model based on reproducing causality to a real robot and making it acquire an understanding of object permanence, gaze-following behavior, and other capabilities (Fig. 14). To verify the validity of the caregiver model, in the future we should use a robot that can interact with a human caregiver in a more natural way, such as M3-Kindy, which possesses not only sensing and action capabilities for learning but also rich facial expressions and locomotion. It is expected to enable us to address long-term interaction in which a human caregiver would be naturally incorporated. Furthermore, we have to address the basic problem of segmenting the information necessary for learning in such unconstrained interactions. With such a research environment, we would be able to consider what kinds of facial expressions or other behaviors are necessary for making a caregiver guide robot development. The simultaneous processes of learning social functions and of social interaction should be considered.

Fig.14 Development experiment in the real world through reproducing causality

Modeling interpersonal cognition via others

Human children are involved in social situations with more than two people from birth. To understand children’s social development, we have to focus not only on dyadic interaction between a child and an adult but also on social interaction with many people. In 2007, we started to model the human mechanism for recognizing interactions between others. In a subject experiment, we showed that the variability of actions influences subjects’ cognition of the interaction between a human and an android [Minato08].

In 2008, we extended our research on gaze interaction from a dyadic to a triadic situation in order to model the social dynamics of interpersonal cognition. We considered that a person’s interpersonal cognition of a certain partner depends not only on their relationship with that partner but also on the relationship between that partner and other people who are (potentially) additional communication partners (see Fig. 15). In a psychological experiment of triadic communication involving one robot, we examined how nonverbal modalities such as gaze contribute to this cognition (see Fig. 16) [Shimada10].

Fig.15 Interpersonal cognition in social interaction

Fig.16 Triad communication experiment involving an android

After 2009, we ran a preliminary experiment on how humans recognize interaction between two robots and found that it was influenced by how one robot responded to the other and how much one imitated the other. In addition, we extended the model of preference formation in the dyadic situation based on mutual excitation to build a computational model of the social dynamics of preference formation through gaze interaction. We ran a computer simulation assuming that the three agents of a family, a child agent and its parents, interact with each other only via gaze (Fig. 17). We showed that the proposed model could produce a state that reproduces the balanced state predicted by a hypothesis in social psychology (Heider’s balance theory), and that the model produces a transition resembling the “kids-as-bond” phenomenon [Yoshikawa09].
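Heider’s balance criterion itself is easy to state for signed relations: a triad is balanced when the product of its three relation signs is positive, that is, when all three relations are positive or exactly two are negative. The sketch below checks this for the family triad. Deriving an undirected sign from the two directed preferences by summing them is an assumption made here, and the preference dynamics of the model in [Yoshikawa09] are not reproduced.

```python
from itertools import combinations


def relation_sign(pref, a, b):
    """Undirected sign of the a-b relation (assumption: sum of the two directed preferences)."""
    return 1 if pref[(a, b)] + pref[(b, a)] > 0 else -1


def is_balanced(pref, agents):
    """Heider's balance: every triad has an even number of negative relations,
    i.e. the product of its three relation signs is +1."""
    return all(
        relation_sign(pref, a, b) * relation_sign(pref, b, c) * relation_sign(pref, a, c) > 0
        for a, b, c in combinations(agents, 3)
    )
```

For example, a family in which both parents like the child but dislike each other is unbalanced under this criterion, whereas one in which all three relations are positive is balanced.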

Fig.17 Computer simulation of dynamics of preference formation

Autism is a developmental disorder whose symptoms include impaired communication capability. Autistic patients behave as if they ignore a communication partner as well as third persons, which is one reason it is difficult to reveal the mechanism of autism. However, autistic children are said to show interest in robots and proto-social behavior toward them. We considered using group-type communication robots, M3-Synchy, to give autistic children the experience of interaction among more than two people. This is expected to provide an experimental environment for understanding autism and, hopefully, to be used in developing communication training programs for autistic children. As a first step, we started a pilot experiment, in a hospital for such patients, on the interaction between robots and children with Asperger syndrome, who can verbally report their impressions of robots. We had them interact with two robots programmed to respond to the children’s actions and examined their impressions. In some cases, autistic children regarded M3-Synchy as a communication partner. In the future, what kinds of responses make autistic children feel a social relationship with robots should be analyzed in relation to the patients’ symptoms.

In interaction involving more than two agents, relationships among the participants are expected to form dynamically. M3-Synchy is a robot with a body smaller than a human’s that still maintains the minimum functions for human communication. It can therefore easily be used to construct interactions among many agents, including humans and multiple robots. Specifically, two M3-Synchys were introduced into a human-human conversation, each held on the lap of one of the two people. They were controlled to nod in response to a person’s utterances and to move their gaze to look at the people. Nodding by an interlocutor is known to promote conversation, probably because it makes the interlocutor appear to be listening. Robot nodding was expected to promote the conversation, perhaps because a person mistakes the robot’s nodding for that of the interlocutor. In the experiment, a subject talked to an experimenter who neither nodded nor smiled while the subject was talking. We observed that nodding by M3-Synchy made the subject feel as if the experimenter was listening to the subject (see Fig. 18) and as if the experimenter was closer to the subject. Further design of synchronization among humans and multiple robots is necessary to maximize the effect of promoting human-human communication, and it would provide novel insights for synthetically modeling the development of social dynamics.

Fig.18 Experiment to promote human-human communication by introducing M3-Synchy